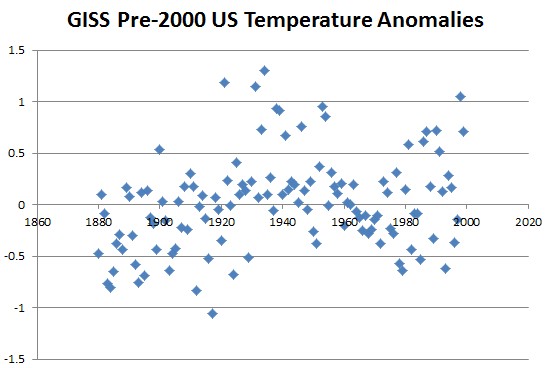

Michael Hammer has found the original uncorrupted GISS US temperature data on John Daly’s web site. Prior to GISS/USHCN perverting the data set in the year 2000, the 1930s was the hottest decade.

Jennifer Marohasy » How the US Temperature Record is Adjusted

www.john-daly.com/usatemps.006

The data was originally here : http://www.giss.nasa.gov/data/update/gistemp/graphs/FigD.txt

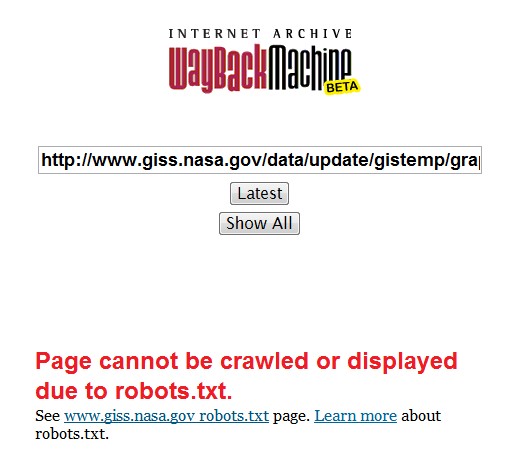

If you try to go to that page, you get this :

Not Found

The requested URL /gistemp/graphs_v3/FigD.txt was not found on this server

So I went to the web archive, to look for an archived copy of the data on the GISS web site.

http://wayback.archive.org/web/*/http://www.giss.nasa.gov/data/update/gistemp/graphs/FigD.txt

This is what you get there :

Here is what www.giss.nasa.gov robots.txt looks like. Hansen is blocking the web archive from searching GISS data, selected meetings and his publications.

User-agent: *

Disallow: /calendar/

Disallow: /cgi-bin/

Disallow: /data/

Disallow: /dontgohere/

Disallow: /gfx/

Disallow: /internal/

Disallow: /lunch/

Disallow: /meetings/arctic2007/pdf/

Disallow: /meetings/arctic2007/ppt/

Disallow: /meetings/pollution2002/present/

Disallow: /meetings/pollution2005/day1/

Disallow: /meetings/pollution2005/day2/

Disallow: /meetings/pollution2005/day3/

Disallow: /meetings/pollution2005/posters/

Disallow: /meetings/lunch/

Disallow: /rp/

Disallow: /tools/modelE/call_to/

Disallow: /tools/modelE/modelEsrc/

Disallow: /tools/panoply/docs/projections/

Disallow: /tools/panoply/help/projections/

Disallow: /~crmim/publications/

Disallow: /~jhansen/

Disallow: /staff/mmishchenko/publications/

Disallow: /staff/jhansen/

Disallow: /staff/img/

User-agent: discobot

Disallow: /
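For readers unsure what those rules do in practice, here is a minimal Python sketch using the standard library’s robots.txt parser. It pastes in only a few of the rules from the listing above, and the crawler name `example-archiver` is made up for illustration. A crawler that honors the file skips the blocked paths; a person with a browser is unaffected.

```python
from urllib.robotparser import RobotFileParser

# A few rules copied from the listing above (not the whole file).
RULES = """\
User-agent: *
Disallow: /data/
Disallow: /staff/jhansen/

User-agent: discobot
Disallow: /
"""

def allowed(path, agent="example-archiver"):
    """Return True if a well-behaved crawler named `agent` may fetch `path`."""
    parser = RobotFileParser()
    parser.parse(RULES.splitlines())
    # "example-archiver" is a hypothetical crawler name used only for this sketch.
    return parser.can_fetch(agent, "http://www.giss.nasa.gov" + path)

if __name__ == "__main__":
    for path in ("/data/update/gistemp/graphs/FigD.txt", "/staff/jhansen/", "/research/"):
        print(path, "->", "allowed" if allowed(path) else "blocked")
    print("/research/ for discobot ->", "allowed" if allowed("/research/", "discobot") else "blocked")
```

The `/research/` path is only there as an example of something not covered by any rule in this excerpt.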

I think you are misreading the word “disallow”. Instead of dis-allow, read it as di-sallow

Sallow = “an unhealthy yellowish color”.

Cowards. Double cowards

Criminal.

I think an FOI request needs to be submitted. Some of the other folders look interesting too.

That’s only for robots. Nothing is hidden when using a browser.

http://www.giss.nasa.gov/staff/jhansen/

I think you are completely missing the point.

Ok, but you can’t browse a website like an FTP site. A missing link or a typo in the file name and you can’t find the file even if it is there.

Using Google with “gistemp site:www.giss.nasa.gov” reveals 328 results

“meetings+pollution2002 site:www.giss.nasa.gov” reveals 133 results

Was the robots.txt recently put in place?

Have you looked on their ftp site? ftp://ftp.giss.nasa.gov/pub/

Maybe it’s there. This is usually the place for sharing data.

http://www.giss.nasa.gov/data/update/gistemp/graphs/FigD.txt

is on the server linked to

http://data.giss.nasa.gov/gistemp/graphs_v3/FigD.txt

This means that they have used a symbolic link for a whole directory or an individual file, and I can tell you that the sysadmin could have messed up the link or the access permissions. I’ve seen this a lot.

It’s a bit strange that the “/data/update/” and the “data/update/gistemp” have a different destination.

http://www.giss.nasa.gov/data/update/

linked to

http://data.giss.nasa.gov/update/

http://www.giss.nasa.gov/data/update/gistemp

linked to

http://data.giss.nasa.gov/gistemp/

The wayback machine and all other internet archives can only archive what their crawlers are allowed to find. Hansen keeps them from archiving versions of his graphs, making it impossible to compare versions of his website later.

You can of course, as a human, regularly go there and save snapshots manually. But you would have to do this over and over again before anything happens in order to be able to prove the manipulations.
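The manual snapshot routine could be partly automated. Below is a rough Python sketch, not anything GISS or archive.org provides: it keeps a dated copy of a file only when the content differs from the most recent saved copy. The function name, the folder layout, and the `YYYYMMDD` stamp format are all my own assumptions.

```python
import hashlib
from pathlib import Path

def save_if_changed(content, folder, stamp):
    """Save `content` (bytes) as <stamp>_<hash>.txt unless it matches the latest snapshot.

    `stamp` is assumed to be a sortable date string such as "20130115".
    Returns True if a new snapshot file was written.
    """
    folder = Path(folder)
    folder.mkdir(parents=True, exist_ok=True)
    digest = hashlib.sha256(content).hexdigest()[:16]
    # File names start with the stamp, so sorting by name gives oldest to newest.
    snapshots = sorted(folder.glob("*.txt"))
    if snapshots and snapshots[-1].stem.endswith(digest):
        return False  # unchanged since the last snapshot
    (folder / f"{stamp}_{digest}.txt").write_bytes(content)
    return True

# Hypothetical usage, after fetching the file by whatever means works:
#   save_if_changed(downloaded_bytes, "FigD_snapshots", "20130115")
```

Because each file name carries both the date and a hash of the content, two snapshots can later be compared without trusting anyone’s memory of what the numbers used to be.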

I should point out here that nothing prevents a web bot from archiving the entire GISS site except the _convention_ of complying with the restrictions laid out in the robots.txt file. If you wanted an archive of the site, you could obtain the source code to a web bot, remove or comment out either the code that reads the robots.txt file from a site or the code that matches URLs against the list of folders in that file, recompile it, and point it at the GISS website to obtain a complete copy of the site.

The file I am interested in has been deleted.


Thanks for this! Last year I looked and looked for it, without success.

However, the data that John Daly archived appears to be newer than the data in the 1999 graph. If you compare the 1934 and 1998 peaks in the 1999 graph, you can see that 1934 was about 0.6 C warmer than 1998.

But in that copy of the data from John Daly’s site, 1934 is only 0.25 C warmer than 1998. (1998 is 4th-warmest, behind 1934, 1921, and 1931.) So, already, by the time that John Daly archived that file, about 0.35 C of cooling had been erased. If anyone knows where to find the earlier version, in which 1934 was about 0.6 C warmer than 1998, as shown in that 1999 graph, I’d be grateful for a copy!

Here’s another version of the data from John Daly’s site. It appears to be from later in year 2000, or perhaps early 2001, and another 0.21 C of warming has been added for the 1934-to-1998 interval, leaving 1934 just 0.04 C warmer than 1998.

Note: In the latest version of the data (and a recent graph), 1934 is 0.078 C cooler than 1998.

I wrote, “In the latest version of the data (and a recent graph), 1934 is 0.078 C cooler than 1998.”

The trend continues: in the version of the U.S. 48-State Surface Air Temperature anomaly data that I downloaded from NASA GISS today, 1934 is 0.1231 °C cooler than 1998.

All the versions I’ve found or reconstructed are here:

http://sealevel.info/GISS_FigD/
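To keep the arithmetic straight, here is a small Python tally of the 1934-minus-1998 differences quoted in the comments above. The version labels are my own shorthand, and the first value is read off the 1999 graph, so treat it as approximate.

```python
# 1934-minus-1998 difference (deg C) in each version, as quoted in the comments above.
# Labels are shorthand for this sketch; the first value is an approximate graph reading.
VERSIONS = [
    ("1999 graph (approx.)", 0.6),
    ("John Daly copy", 0.25),
    ("late 2000 / early 2001 copy", 0.04),
    ("later GISS version", -0.078),
    ("most recent download", -0.1231),
]

def step_changes(rows):
    """Change in the 1934-minus-1998 difference from each version to the next."""
    return [(rows[i][0], round(rows[i - 1][1] - rows[i][1], 4))
            for i in range(1, len(rows))]

def total_change(rows):
    """Total shift between the first and last versions."""
    return round(rows[0][1] - rows[-1][1], 4)

if __name__ == "__main__":
    for label, change in step_changes(VERSIONS):
        print(f"{label}: 1934 lost {change} C relative to 1998")
    print("total:", total_change(VERSIONS), "C")
```

On those figures the steps are 0.35, 0.21, 0.118 and 0.0451 C, for a total of roughly 0.72 C across the five versions.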

When you’ve committed so much social, political, and financial profit in these debased versions of reality, then it is no surprise that earlier (honest and clean) versions are hidden and protected, or even erased.

Let’s hope that more knowledgeable and far smarter sources than ourselves will expose Hansen in all his glorious detail.

DirkH notes: “You can of course, as a human, regularly go there and save snapshots manually. But you would have to do this over and over again before anything happens in order to be able to prove the manipulations.”

I believe Steven Goddard has given motivated readers here just the incentive needed to do so. Thank you Steve.

This link works, but maybe it’s not what you’re looking for?

http://web.archive.org/web/20060110100426/http://data.giss.nasa.gov/gistemp/graphs/Fig.D.txt

Nope, wrong URL. That is the corrupted data.

I’m not sure which vintage this is: http://data.giss.nasa.gov/gistemp/graphs_v3/Fig.D.txt but if you compare it to http://data.giss.nasa.gov/gistemp/graphs_v2/Fig.D.txt there are changes.

If a government worker deliberately (or even “mistakenly”) tampers with public records information, that person is a criminal. I seem to recall a certain WH occupant resigning over a mere 18 minutes of “mistakenly erased reel-to-reel tape”. James “Algore’s Sockpuppet” is light years ahead of that certain WH occupant, when it comes to criminally destroying and/or altering public records. We can only hope, for the sake of our children’s futures, that Hansen and the rest of his criminal cohorts are stopped and imprisoned before they completely destroy not only our economy (and take away our individual liberties), but the rest of the world’s.

Thank you for all you do, Mr. Goddard. You will go down in history as one of the great defenders of truth and science.

robots.txt only tells search bots what not to index. It does not set permissions on files… There’s no conspiracy there mate. The rest is interesting though.

Moron alert.

The good news is that you, Steve, apparently embarrassed GISS into revising their robots.txt to be less restrictive.

They’d had that restrictive robots.txt file there for years. But after you posted this article on 6/11/2012, it was less than 17 days before they fixed it. Here’s what it looked like 17 days later:

https://web.archive.org/web/20120628024837/http://data.giss.nasa.gov/robots.txt

Unfortunately, the old robots.txt prevented archiving a lot of material, before they changed it. Even the old robots.txt, itself, wasn’t archived, which is presumably why you get this error:

https://web.archive.org/web/20120611030727/http://data.giss.nasa.gov/robots.txt
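The 14-digit number in a Wayback Machine URL is a timestamp in `YYYYMMDDhhmmss` form, so the gap between the two links above can be checked directly. A minimal Python sketch follows; note that the timestamp in the second link is the time that was requested, which is not necessarily the time of an actual capture.

```python
import re
from datetime import datetime

# Wayback URLs look like .../web/<14-digit timestamp>/<original URL>
WAYBACK_TS = re.compile(r"/web/(\d{14})/")

def wayback_time(url):
    """Extract the timestamp embedded in a Wayback Machine URL."""
    match = WAYBACK_TS.search(url)
    if match is None:
        raise ValueError("no 14-digit Wayback timestamp in " + url)
    return datetime.strptime(match.group(1), "%Y%m%d%H%M%S")

if __name__ == "__main__":
    revised = wayback_time(
        "https://web.archive.org/web/20120628024837/http://data.giss.nasa.gov/robots.txt")
    requested = wayback_time(
        "https://web.archive.org/web/20120611030727/http://data.giss.nasa.gov/robots.txt")
    print(revised - requested)
```

The difference comes out a little under 17 days, which fits the “less than 17 days” figure.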

It stinks to high heaven that they ever created such a robots.txt file. The obvious question is, what were they hiding?

Well, I spoke too soon. It turns out they tricked me (briefly).

I downloaded all of the old NASA GISS U.S. Surface Temperature Anomaly files I could find, compared them to eliminate the duplicates, organized them into a table, and put them on my server, here:

http://sealevel.info/GISS_FigD/ (No warranty is expressed or implied.)

There are two interesting things to notice.

First, see the footnote at the bottom about “time travel.”

Really! In both 2011 and 2012, GISS had average temperature anomalies reported for the full year in August of that same year! That takes “we don’t need no stinking data!” to a whole new level.

Second, note that there have been no copies of this data saved at archive.org since October 3, 2012 (16 months ago).

I wondered why not. There was a new version last January. So why didn’t archive.org save it?

It turns out that the reason for that is that sometime between 9:25am 1/14/2013 and 5:20am 1/15/2013 GISS configured their web server to prevent archiving anything in http://data.giss.nasa.gov/gistemp/.

Sometime in March, 2013, they changed their server configuration; the error seen by archive.org is now different. On 3/14/2013, one successful archive of the main http://data.giss.nasa.gov/gistemp/ page snuck through (probably while they were in the process of changing their server configuration), but, unfortunately, not the data. Since then their server has blocked every access attempt from archive.org.

You can still view the current version in a normal web browser, but Archive.org, CiteBite.com and WebCitation.org all fail when trying to archive the file.

Here’s what WebCitation reported when I tried to use it to save the current Fig.D.txt:

In fact, even wget on my own computer fails, with a “403 Forbidden” error! Here’s what happens:

To download an end-of-year 2013 copy of Fig.D.txt for my table, I had to manually save it from within a web browser.

This behavior could not be accidental. GISS has intentionally configured their web server to prevent their (our!!!) data from being archived.

I think it will be necessary to spoof a regular browser in order to automate downloading those files.
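Something along these lines might do it. This is only a sketch in Python, and it assumes the server is filtering on the User-Agent header, which I have not confirmed; the browser string is just an illustrative value, and `build_request` and `download` are names I made up.

```python
import urllib.request

# Illustrative browser-style User-Agent string; any current browser's value could be used.
BROWSER_UA = "Mozilla/5.0 (X11; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/115.0"

def build_request(url, user_agent=BROWSER_UA):
    """Build a request that presents a browser-style User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})

def download(url, dest):
    """Fetch `url` and write the response body to the file `dest`."""
    with urllib.request.urlopen(build_request(url), timeout=30) as response:
        data = response.read()
    with open(dest, "wb") as out:
        out.write(data)

# Hypothetical usage (performs a real network request, so it is left commented out):
#   download("http://data.giss.nasa.gov/gistemp/graphs_v3/Fig.D.txt", "Fig.D.txt")
```

If the block is based on something other than the User-Agent header (the client’s address, for example), this will still get a “403 Forbidden”.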

Steve, my guess is that, as a result of your 6/11/2012 blog article, about GISS using robots.txt to prevent archiving of their data, GISS was ordered by somebody higher-up to stop doing that. So they cheated: they changed their robots.txt to make it appear that they no longer block archive.org, but they actually just configured their web server to do the blocking, instead.

Here’s their current robots.txt:

See, their robots.txt now says to block their /pub and /outgoing folders from being archived (why?), but it now looks like they allow archive.org to archive most of the rest of their site, including /gistemp/. I’d bet money that’s because of the stink you made, Steve. Somebody probably issued an edict: “stop using robots.txt to block archive.org!”

So they pulled a dirty trick. They changed robots.txt to no longer show that archive.org is blocked, but they configured their server to do the blocking, instead. The last successful archive of Fig.D.txt by archive.org was at 3:20am EDT on 10/3/2012. Since then their server has been blocking access with “403 Forbidden” errors.

Here’s what archive.org sees now:

http://wayback.archive.org/web/20130607084126/http://data.giss.nasa.gov/gistemp/graphs_v3/Fig.D.txt -> “Access denied.”

This amazes me. I really am surprised at how blatant their misbehavior is. They’re absolutely shameless. I’m becoming convinced that the guys running GISS are just plain crooks. If I’d given an order that they cease blocking archive.org with robots.txt, and I subsequently discovered this subterfuge, I’d fire somebody so fast there would be skid marks on the sidewalk outside the front door where their butt hit the concrete.

That has me thinking of the old saw: “Never attribute to malice that which is adequately explained by stupidity”.

One could speculate (borrowing CAGW logic to do so) that, based upon these epic levels of stupidity evidenced at NOAA and NASA, stupidity must be caused by government climatology grants.

Quote – I think it will be necessary to spoof a regular browser in order to automate downloading those files.

There are tools (web scrapers) that will do the job of downloading.

I use this one for my interests.

http://www.heliumscraper.com/en/index.php?p=home

Told ya to keep ALL the data used previously. This is time for Federal Police Intervention. All GISS records should be seized and impounded. BTW Hansen ain’t there anymore, it’s dear ol’ Gavin. They would be mightily Pxxxxd off with your site and trying to prevent ANY access to previous data.

These talented folks at NASA Goddard Institute for Space Studies have just confirmed serious charges made on 17 July 2013 to the Congressional Space Science and Technology Committee

https://dl.dropboxusercontent.com/u/10640850/Creator_Destroyer_Sustainer_of_Life.pdf

Prof. Manuel, as interesting as your neutron repulsion material might be (if I could understand it), it seems off-topic here. It really doesn’t seem to have anything to do with GISS blocking access to archived data and Hansen’s writings, nor anything else we’ve been talking about.

If NASA GISS is now backing your theories, that’s interesting, and I can certainly understand you being excited about it. But I don’t see anything about that in the document at the dropbox link you provided, and google finds nothing, either:

http://www.google.com/search?q=neutron+repulsion+site%3Agiss.nasa.gov

BTW, I archived your document here: http://www.webcitation.org/6S6puQWNm

Guess what? NOAA does it, too.

A little over three years ago I was unhappy about one of the adjustments which NOAA was doing, to “homogenize” temperature data, and I wrote this:

https://archive.today/9EBUh#selection-2135.0-2223.141

The NOAA web page to which that refers described a procedure in which satellite night-illumination data was used as a proxy for urban warming, to adjust measured temperature data. I thought that was very crude, and highly questionable.

However, NOAA has changed the web page, and that material is gone, now.

No problem, right? Just use archive.org to view the old version, right?

Wrong. NOAA uses robots.txt to prevent archiving of their pages, so archive.org doesn’t have the old version:

http://wayback.archive.org/web/*/http://www.ncdc.noaa.gov/oa/climate/research/ushcn/

http://sealevel.info/noaa_blocking_wayback_machine.png

Here’s the http://www.ncdc.noaa.gov/robots.txt file:

User-agent: *

Disallow: /*.csv

Disallow: /*.dat

Disallow: /*.kmz

Disallow: /*.ps

Disallow: /*.txt

Disallow: /*.xls

Disallow: /applet/

Disallow: /ASHRAE/

Disallow: /atrac/*?

Disallow: /cag/time-series

Disallow: /c/

Disallow: /cdo.*

Disallow: /cdo-services/

Disallow: /cdo-web/quickdata

Disallow: /cgi-bin/

Disallow: /common/

Disallow: /conference/

Disallow: /crn/ws/

Disallow: /crn/report/

Disallow: /crn/flex/

Disallow: /crn/error/

Disallow: /crn/data/

Disallow: /crn/new*

Disallow: /crn/*?

Disallow: /crn/*.ws$

Disallow: /crn/*.htm$

Disallow: /crn/graphdoc/

Disallow: /crn/img/

Disallow: /crn/js/

Disallow: /crn/multigraph/

Disallow: /crn/test/

Disallow: /crn/theme/

Disallow: /crn/mon-dashboard.html

Disallow: /doclib

Disallow: /doclib/index.php?

Disallow: /DLYNRMS/

Disallow: /EdadsV2

Disallow: /extremes/cei/*/

Disallow: /extremes/cei/*.php?

Disallow: /extremes/nacem/?

Disallow: /ghcnm/*.php?

Disallow: /gibbs/

Disallow: /hofn*/

Disallow: /homr/services/

Disallow: /i/

Disallow: /i_cdo/

Disallow: /ibtracs/

Disallow: /isis/

Disallow: /img/

Disallow: /imgwms/

Disallow: /IPS/*/

Disallow: /IPS/progress

Disallow: /local/

Disallow: /nacem/NACEMMap?

Disallow: /noaamil/

Disallow: /noaaonly/

Disallow: /notonline/

Disallow: /nwsonly/

Disallow: /nexradinv/

Disallow: /nexradinv-ws/

Disallow: /oa/

Disallow: /paleo/metadata/

Disallow: /prototypes/

Disallow: /records/*.php?

Disallow: /snow-and-ice/extent/*/

Disallow: /snow-and-ice/recent-snow/*/

Disallow: /snow-and-ice/rsi/societal-impacts/

Disallow: /snow-and-ice/snow-cover/*/

Disallow: /societal-impacts/air-stagnation/*/

Disallow: /societal-impacts/csig/*/

Disallow: /societal-impacts/redti/*/

Disallow: /societal-impacts/wind/*/

Disallow: /stormevents/*.jsp?

Disallow: /stormevents/csv

Disallow: /swdiws/

Disallow: /thredds/

Disallow: /teleconnections/*.php?

Disallow: /temp-and-precip/*.php?

Disallow: /temp-and-precip/alaska/*/

Disallow: /temp-and-precip/climatological-rankings/?

Disallow: /temp-and-precip/climatological-rankings/download.xml

Disallow: /temp-and-precip/drought/nadm/nadm-maps.php/?

Disallow: /temp-and-precip/global-temps/*/

Disallow: /temp-and-precip/msu/*/

Disallow: /temp-and-precip/national-temperature-index/*?

Disallow: /temp-and-precip/time-series/?

Disallow: /wct*?
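Several of those rules use wildcards: `*` stands for any run of characters, and a trailing `$` pins the rule to the end of the URL path. As a rough illustration of how a crawler that follows that convention would read them, here is a small Python sketch. It checks only a handful of the rules above, the helper names are mine, and the `/crn/report.htm` and `/pub/data/readme.txt` paths are made-up examples.

```python
import re

# A handful of rules copied from the listing above.
RULES = ["/*.txt", "/oa/", "/crn/*.htm$", "/cgi-bin/"]

def rule_to_regex(rule):
    """Translate a robots.txt path rule ('*' wildcard, optional trailing '$') to a regex."""
    anchored = rule.endswith("$")
    body = rule[:-1] if anchored else rule
    # Escape each literal piece and let '*' match any run of characters.
    pattern = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile("^" + pattern + ("$" if anchored else ""))

def blocked_by(path, rules=RULES):
    """Return the rules that match `path` (empty list means not blocked by these rules)."""
    return [rule for rule in rules if rule_to_regex(rule).match(path)]

if __name__ == "__main__":
    for path in ("/oa/climate/research/ushcn/", "/crn/report.htm",
                 "/crn/report.html", "/pub/data/readme.txt"):
        print(path, "->", blocked_by(path) or "not blocked by these rules")
```

The first path is the USHCN page discussed above, which falls under the `/oa/` rule.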

They’re also using server configuration tricks to block webcitation.org from archiving pages.

Such behavior is outrageous, and highly suspicious. That is our data! What do they have to hide?

Go get ’em Dave! Your points are all home runs!!!

Thank you, Tom! Encouragingly, the NOAA NCDC (now NCEI) web page example which I mentioned in my Feb. 4, 2015 comment and showed with the screenshot is no longer blocked:

http://wayback.archive.org/web/*/http://www.ncdc.noaa.gov/oa/climate/research/ushcn/

However, NOAA NCDC is still using their robots.txt to block archive.org for most NCDC data files.

(Digression: But I can’t seem to find the description of how they use(d) satellite night-illumination data as a crude proxy for urban warming when “homogenizing” the temperature data, which I complained about in 2011 — maybe it was in some other document.)

One subtlety, which I didn’t realize back in 2015, is that a restrictive robots.txt apparently does not cause archive.org to discard old archived copies, nor even entirely prevent it from archiving new copies. It only prevents archive.org from retrieving the old copies for viewing. So if the robots.txt file is subsequently removed, or revised to be less restrictive, the old versions of the files might reappear in archive.org’s version history.

So I am hopeful that if the Trump Administration orders NCDC / NCEI, NASA, etc. to stop this misbehavior, then a lot of the apparently lost old data file versions might be found!

At first I thought that NASA GISS had removed the 1999 Hansen paper which included this graph showing U.S. temperatures declining from the 1930s to the 1970s:

http://www.sealevel.info/fig1x_1999_highres_fig6_from_paper4_27pct_1979circled.png

Google still remembers where the paper was:

https://www.google.com/search?q=1999_Hansen_etal_1.pdf+site%3Agiss.nasa.gov

http://sealevel.info/GISS_deletes_Hansen1999_paper_and_robots_dot_txt_abuse.png

But the location which used to contain the paper now gives a 404 page not found error:

https://pubs.giss.nasa.gov/docs/1999/1999_Hansen_etal_1.pdf

Actually, they didn’t remove it, they just renamed it. This is the new link:

https://pubs.giss.nasa.gov/docs/1999/1999_Hansen_ha03200f.pdf

However, notice the note at the bottom of the Google search result: “A description for this result is not available because of this site’s robots.txt”

That’s because GISS has configured their robots.txt to “disallow” that “docs” folder, and three others. Here’s the contents of their robots.txt file:

Now, why would they do that? I think there’s still some swamp-draining needed at NASA!