According to USHCN data, daily maximum temperatures during the summer of 1936 averaged more than seven degrees hotter than the summer of 2012.

U.S. Historical Climatology Network

www.wunderground.com/history/airport/KSNL/

NOAA says 2012 is much hotter, because they are completely FOS.

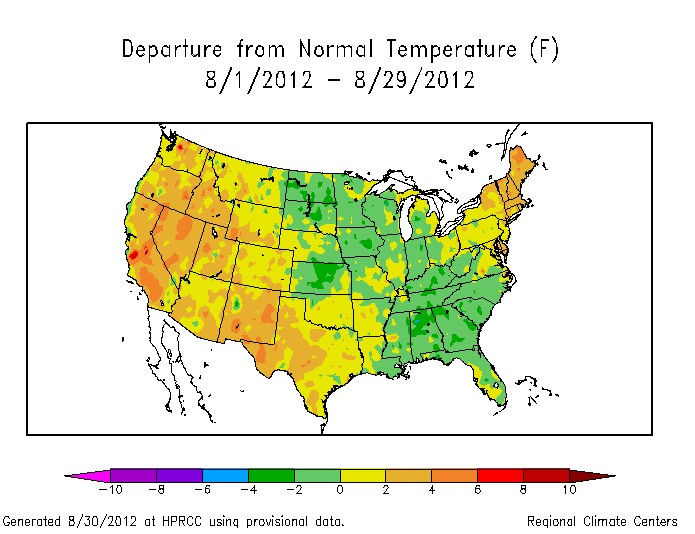

Compare August 1936 to August 2012 below.

http://docs.lib.noaa.gov/rescue/mwr/064/mwr-064-08-c1.pdf

NOAA could go DOWN UNDER to COOL DOWN in something of the past

http://ski.com.au/reports/australia/nsw/perisherblue/index.html

S.H. doesn’t look like GLOBAL warming

http://www.osdpd.noaa.gov/data/sst/anomaly/anomnight.current.gif

Interesting. TOBS can’t be much of an issue when the daily max is consistently high and has little variance. What happened in 2012 with the 8/9 days straight of 92 degrees for the high? Pretty strange.

NOAA reported that July 2012 was hotter than July 1936. NOAA has made no such statements regarding August.

July was 4.7 degrees hotter in 1936

The raw data showed that 1936 was significantly hotter than 2012. I am not interested in mysterious adjustments by people with an obvious agenda, which is nothing but an invitation to cheat.

No doubt all on both sides of the divide have thought all this through, but every time I pose this question nobody can answer it, so perhaps I will have more luck this time.

I am thinking through the possibility that CO2 may be more than a bit player in this primarily western obsession of seeing it as AGW.

The atmosphere filters out a fair amount of incoming short wave radiation/heat, and water vapor is seen as a major contributor to this filter. Most if not all other gases, being fairly evenly distributed, may play a general role, but water is unique in its patchy distribution. This is witnessed by, for instance, the relatively low daytime temperature in the humid parts of the tropics, whereas the temperature in low humidity areas at the same latitudes gets much higher. In similar fashion the humidity slows down the escape of heat at night, hence the tropics have warmer nights and low humidity areas have cold nights. The swings are less dramatic but it works the same in the temperate climates: a day with high humidity does not get as warm, and the night not as cold, as on a day with low humidity.

So we can conclude that water vapor is a major regulator of temperature.

If I understand it correctly, it is generally believed that CO2 does not block any incoming radiation but absorbs the radiation as it is coming off the surface. Let’s assume that to be the case.

If that radiation has a temp of say 20C then CO2 can never emit more than that. Under the laws of physics there is always a loss in energy, so going by this it should be less than 20C.

Half of what it emits is directed back to earth, so the models tell us.

So how can half the absorbed energy, directed back to earth and never higher in temp than what was absorbed to start with, be making it warmer? If that is the case then we have just solved the world’s energy problems.

20 in and, say for argument’s sake, 18 out does not heat the room. No heater that emits less heat than the temp in the room can heat the room.

So that is not it. CO2 does not generate heat by itself and cannot warm the air.

Ok, next possibility: it slows down the heat loss, much like water vapor does as per above. In theory that could cause an increase, provided you have a high enough concentration. After 2 pm it starts to cool down all the way till sunrise, with the peak heat input lasting less than an hour.

Once you remove or lower the heat source from under a blanket it cools down, and the thicker the blanket the longer it takes. See water vapor in the tropics as above; again, the concentration issue.

As CO2 cannot generate heat, we go with this one.

It appears that the theory is based on a starting point of CO2 in 1850 at around 280 ppm. Despite some people pulling the CO2 cord in the late 19th and early 20th centuries, nature managed to auto-correct these through its own cycles, all the way to the late 70’s when the readings were around 350/360 ppm. Then all of a sudden, before the temp was showing any signs of going up and while the whole world was still concerned about the coming ice age (manmade, mind you), the first voices of Arrhenius came back to haunt us.

So up to 360 ppm, when we were cooling but had a 30% odd increase in CO2, this chemical, vital for life, was not a problem, but all of a sudden it was. Every ppm since has been weighing heavily on the minds of some, and all of a sudden it was a major contributor to slowing down the heat loss.

By now we have a catastrophic additional 35 ppm, 10%, and nothing can stop the increasing temp, that should really read: increase the heat loss.
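For anyone who wants to check the percentages quoted above, here is a minimal sketch in Python. It uses only the figures mentioned in this comment (280 ppm for 1850, 350/360 ppm for the late 70’s, and an additional 35 ppm since). The helper name `pct_increase` is purely illustrative, and none of the numbers are independently sourced here.

```python
def pct_increase(old_ppm, new_ppm):
    """Return the percentage increase from old_ppm to new_ppm."""
    return (new_ppm - old_ppm) / old_ppm * 100.0


# Figures as quoted in the comment above (assumptions, not measured data).
BASELINE_1850 = 280       # ppm, stated starting point
LATE_70S = (350, 360)     # ppm, the "350/360" range given for the late 70's
ADDITIONAL_SINCE = 35     # ppm added since then, per the comment

for level in LATE_70S:
    rise_to_70s = pct_increase(BASELINE_1850, level)
    rise_since = pct_increase(level, level + ADDITIONAL_SINCE)
    print(f"{BASELINE_1850} -> {level} ppm: {rise_to_70s:.1f}% increase")
    print(f"{level} -> {level + ADDITIONAL_SINCE} ppm: {rise_since:.1f}% increase")
```

On these figures the rise up to the late 70’s works out to somewhere between 25 and 29 percent, and the rise since then to roughly 10 percent.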

All the natural cycles with an unknown number of factors determining the climate, even if we just look at the last few centuries with warming and cooling well documented, are no match for 35 ppm CO2.

And some people find it strange I believe in nature doing what it has always done.

Which is not to say that we should not use our resources as frugally as possible, lest we run out before real alternatives are established.