Zeke Hausfather is always trying to discredit my graphs showing NOAA data tampering, with one line of BS or another. One of his favorites is the claim that “changing station composition” causes a cooling bias in the US temperature record.

> Where did Goddard go wrong?
>
> Goddard made two major errors in his analysis, which produced results showing a large bias due to infilling that doesn’t really exist. First, he is simply averaging absolute temperatures rather than using anomalies.
>
> Absolute temperatures work fine if and only if the composition of the station network remains unchanged over time.

Zeke Hausfather, The Blackboard: “How not to calculate temperature”
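The mechanism Zeke is describing can be shown with a toy example. The two stations, their temperatures, and the `network_average` helper are hypothetical, made up for illustration; none of this is NOAA data. When the warm station stops reporting, the absolute average falls 10 degrees even though neither station changed, while the anomaly average stays put. When the same stations report every year, the two methods differ only by a constant, so they show the same change. That is why a fixed set of stations is a direct test of his claim.

```python
from statistics import mean

def network_average(readings, baselines, use_anomalies):
    """Average one year's readings over whichever stations reported.

    readings:  {station: temperature this year}  (hypothetical layout)
    baselines: {station: that station's long-term mean}
    With use_anomalies=True each reading is first taken relative to its baseline.
    """
    if use_anomalies:
        return mean(readings[s] - baselines[s] for s in readings)
    return mean(readings.values())

# Hypothetical warm and cool stations (made-up numbers, not real data).
baselines = {"south": 70.0, "north": 50.0}
year1 = {"south": 70.0, "north": 50.0}   # both stations report
year2 = {"north": 50.0}                  # the warm station stops reporting
year3 = {"south": 71.0, "north": 51.0}   # both report, each 1 degree warmer

for label, flag in (("absolute", False), ("anomaly", True)):
    base = network_average(year1, baselines, flag)
    dropout = network_average(year2, baselines, flag) - base
    fixed = network_average(year3, baselines, flag) - base
    print(f"{label}: change after dropout {dropout:+.1f}, change with fixed set {fixed:+.1f}")
```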

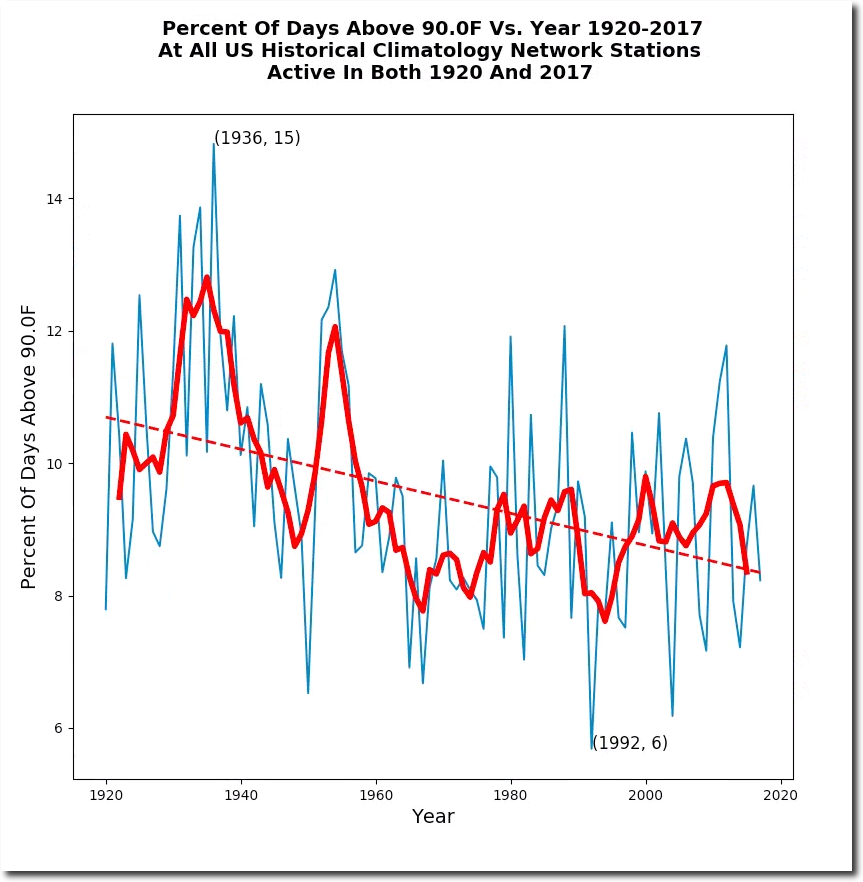

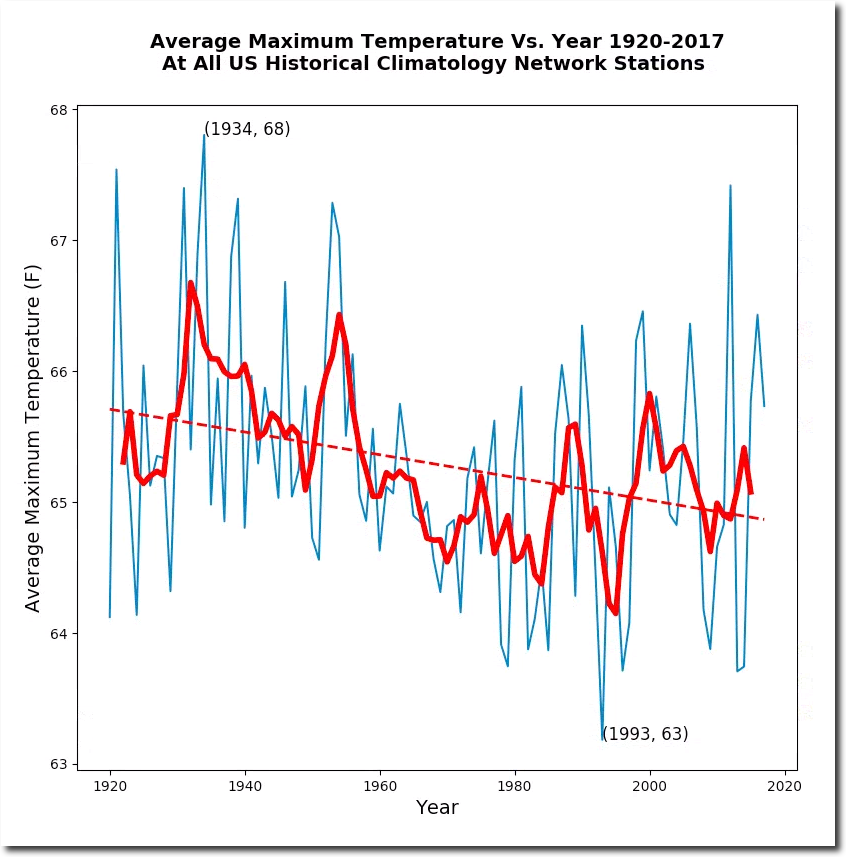

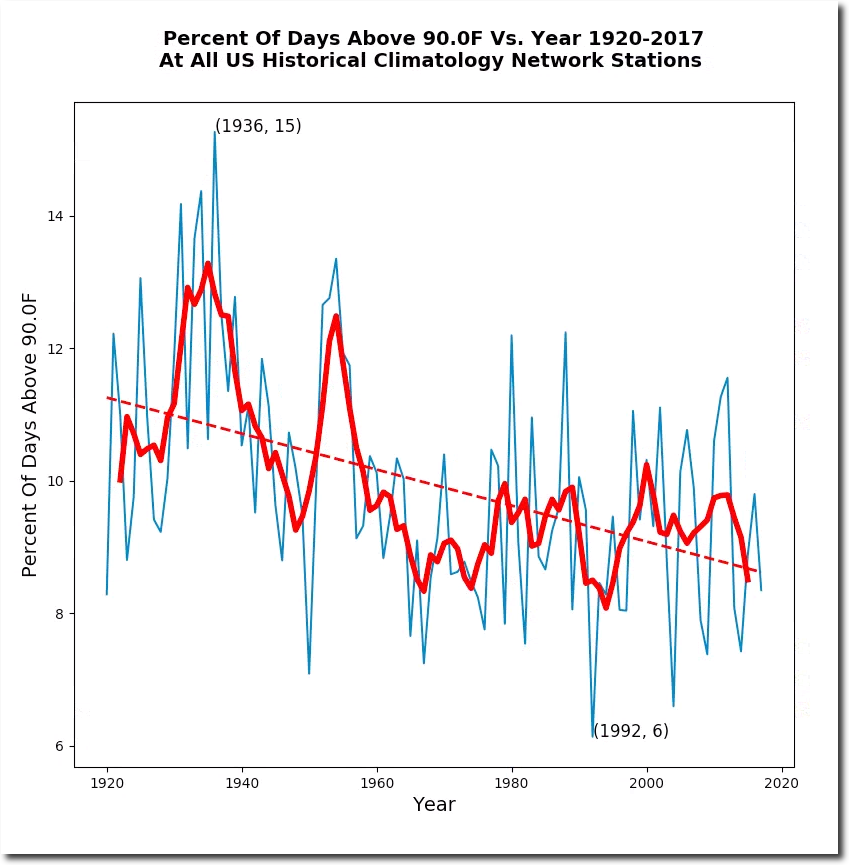

It is simple enough to test his theory by using only the stable set of 720 stations that have been continuously active since 1920.

The trend for the stable set of stations above is almost identical to the trend for all 1218 stations below.

The difference in trends between the two groups of stations is very minor. No matter how Zeke tries to distract attention with his BS, the US used to be much hotter, and the NOAA data tampering is fraudulent.
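A stable-set versus all-stations trend comparison can be sketched as follows. This is a minimal sketch under stated assumptions, not the method used to make the graphs above: the `records` layout, the `stable_stations`, `annual_means` and `ols_slope` helpers, and the toy numbers are all hypothetical. The stable set is every station that reported in every year, each year's value is a plain average of absolute temperatures, and the trend is an ordinary least-squares slope in degrees per year.

```python
from statistics import mean

def stable_stations(records):
    """Stations present in every year of the (hypothetical) records dict."""
    return set.intersection(*(set(year_data) for year_data in records.values()))

def annual_means(records, stations=None):
    """Average each year's station temperatures.

    records:  {year: {station_id: annual mean temperature}}  (hypothetical layout)
    stations: optional set of station ids to keep; None keeps every station
              that reported that year.
    """
    years = sorted(records)
    means = []
    for year in years:
        temps = [t for sid, t in records[year].items()
                 if stations is None or sid in stations]
        means.append(mean(temps))
    return years, means

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs (y units per x unit)."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

# Toy records (made-up): station "c" misses 1921, so it is not in the stable set.
records = {
    1920: {"a": 50.0, "b": 60.0, "c": 70.0},
    1921: {"a": 51.0, "b": 61.0},
    1922: {"a": 52.0, "b": 62.0, "c": 72.0},
}

stable_trend = ols_slope(*annual_means(records, stable_stations(records)))
all_trend = ols_slope(*annual_means(records))
print(f"stable set:   {stable_trend * 100:.1f} degrees per century")
print(f"all stations: {all_trend * 100:.1f} degrees per century")
```

In this toy case the dropout year drags the all-station average down, yet the fitted slope still matches the stable set; with real data the two slopes would generally differ somewhat, and comparing them is the whole point of the test.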