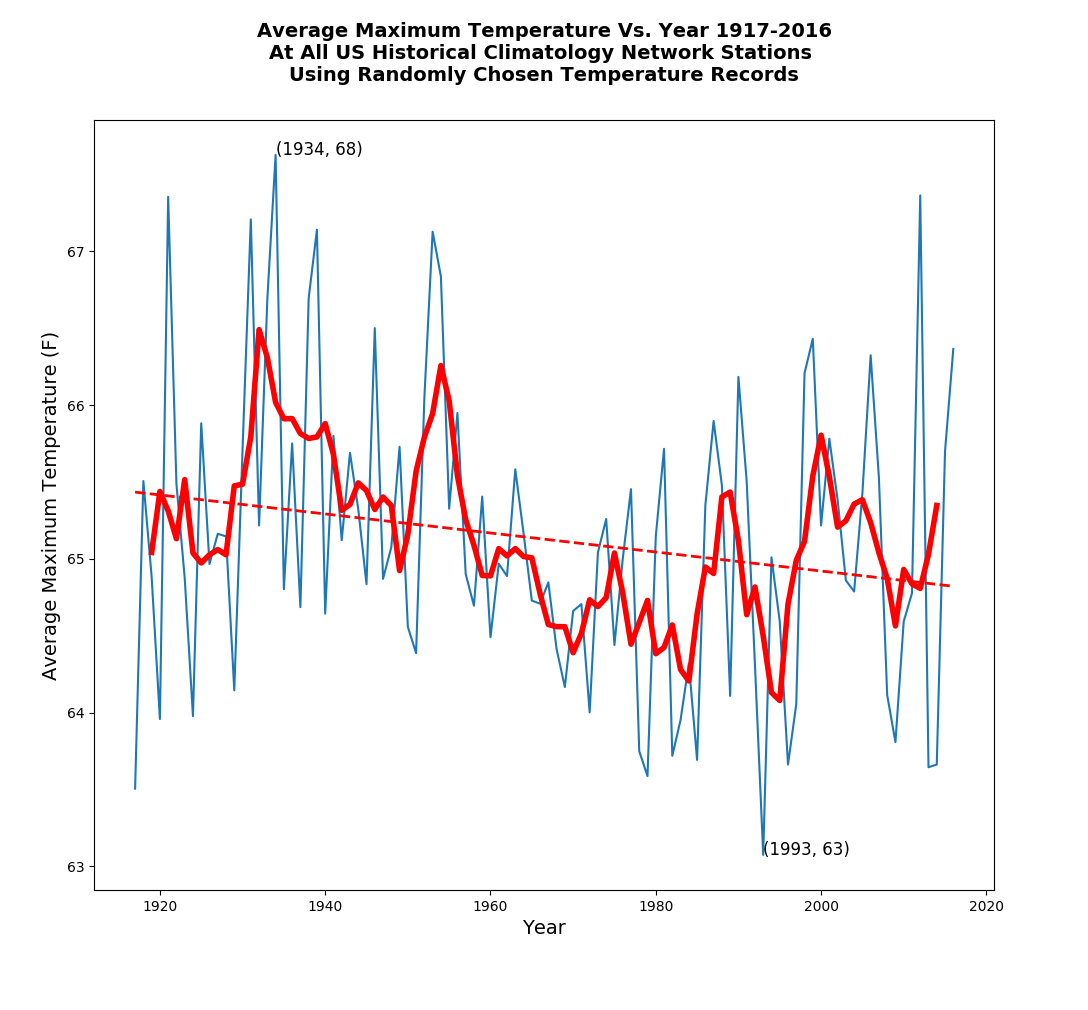

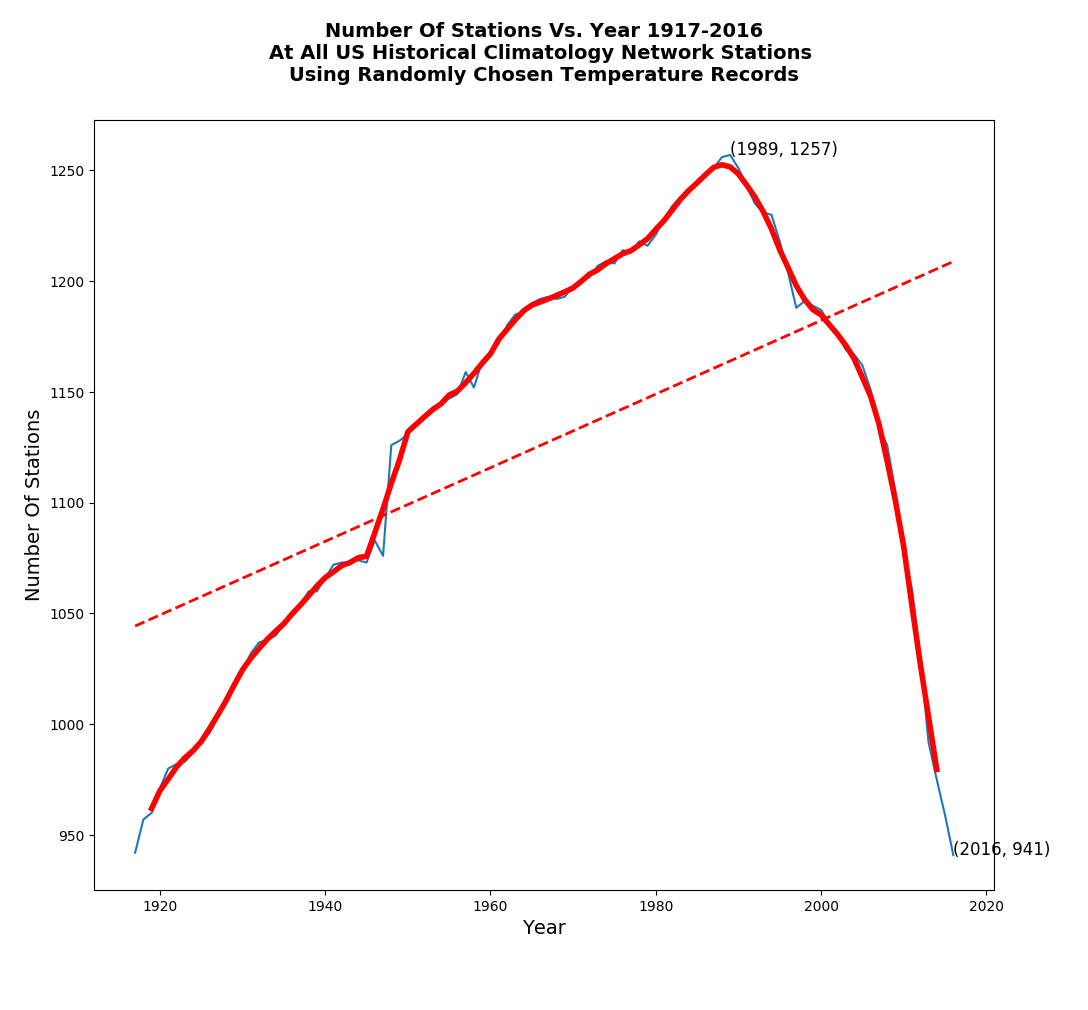

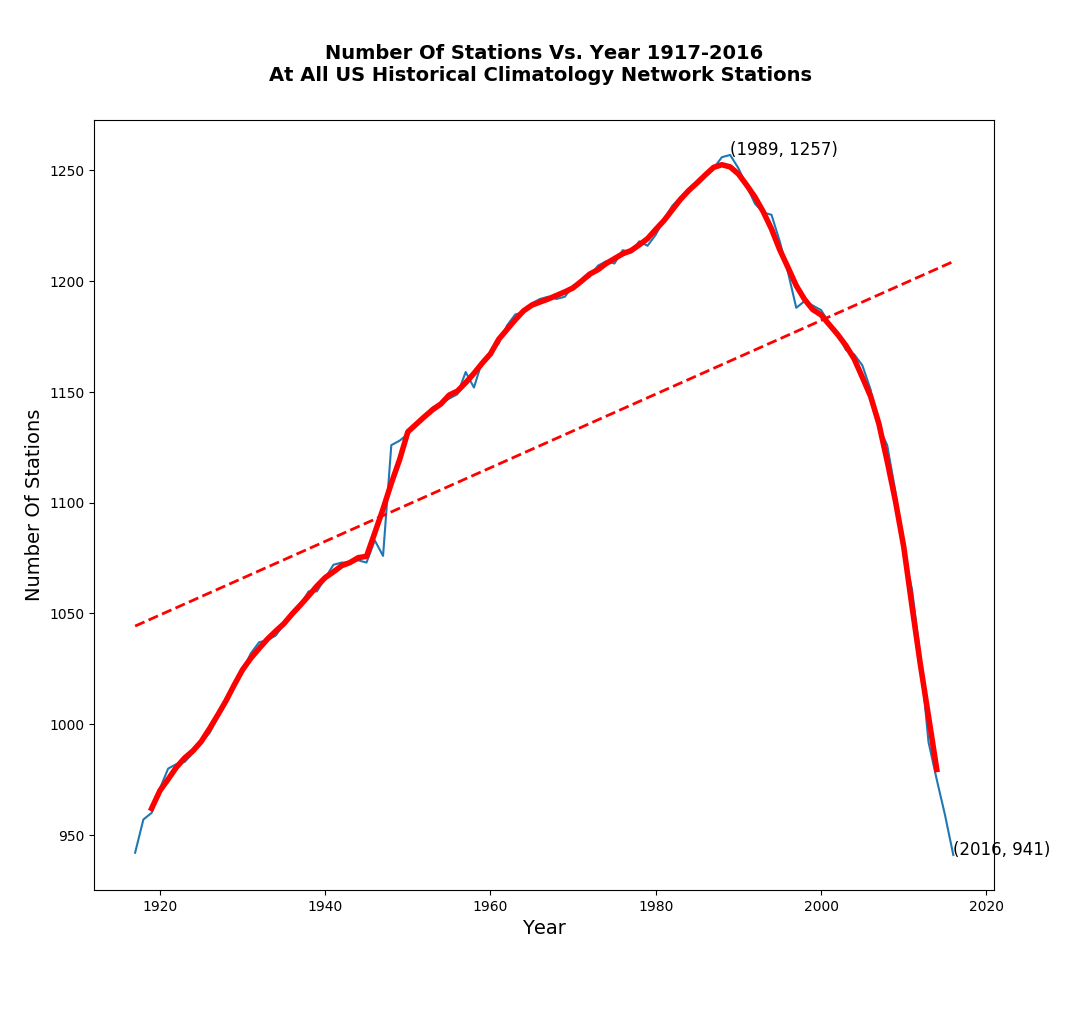

Nick and Zeke’s favorite justification for NOAA data tampering, which turns US cooling into warming, is “changing station composition” – i.e. the set of USHCN stations isn’t identical from year to year. In this post I examine that rationalization.

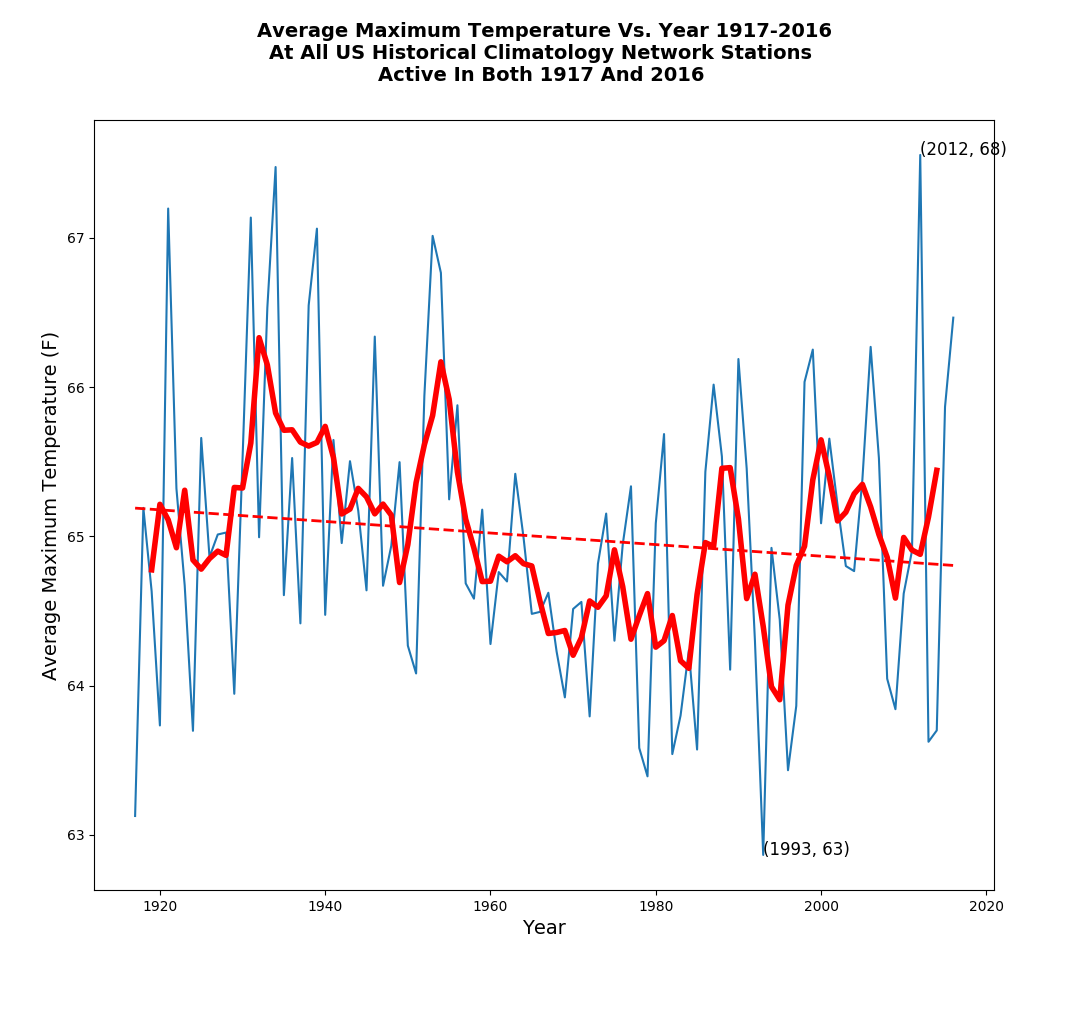

Rather than attempting to adjust the temperatures, let’s do a much more rigorous experiment and simply use a set of stations which haven’t changed. There are 747 US stations which were continuously active over the past century. They show exactly the same pattern as the set of all stations.
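For readers who want to try the fixed-station selection themselves, here is a minimal sketch. It assumes the records have already been parsed into `(station_id, year, tmax)` tuples; the function names and the toy values are made up for illustration and are not USHCN data or the code used for the graphs in this post.

```python
from collections import defaultdict

def continuous_stations(records, start_year, end_year):
    """Return IDs of stations that report in every year of [start_year, end_year]."""
    years_seen = defaultdict(set)
    for station_id, year, _tmax in records:
        if start_year <= year <= end_year:
            years_seen[station_id].add(year)
    needed = end_year - start_year + 1
    return {sid for sid, years in years_seen.items() if len(years) == needed}

def yearly_mean(records, station_ids):
    """Average the values per year, using only the chosen stations."""
    sums = defaultdict(lambda: [0.0, 0])  # year -> [running total, count]
    for station_id, year, tmax in records:
        if station_id in station_ids:
            sums[year][0] += tmax
            sums[year][1] += 1
    return {year: total / count for year, (total, count) in sorted(sums.items())}

# Made-up toy records (station_id, year, mean Tmax in F), for illustration only.
toy = [
    ("A", 2000, 60.0), ("A", 2001, 61.0), ("A", 2002, 62.0),
    ("B", 2000, 70.0), ("B", 2002, 72.0),  # B is missing 2001, so it is excluded
    ("C", 2000, 50.0), ("C", 2001, 51.0), ("C", 2002, 52.0),
]
fixed = continuous_stations(toy, 2000, 2002)
fixed_means = yearly_mean(toy, fixed)
```

Because the station set never changes, any year-to-year difference in `fixed_means` comes from the readings themselves and not from which stations happened to report.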

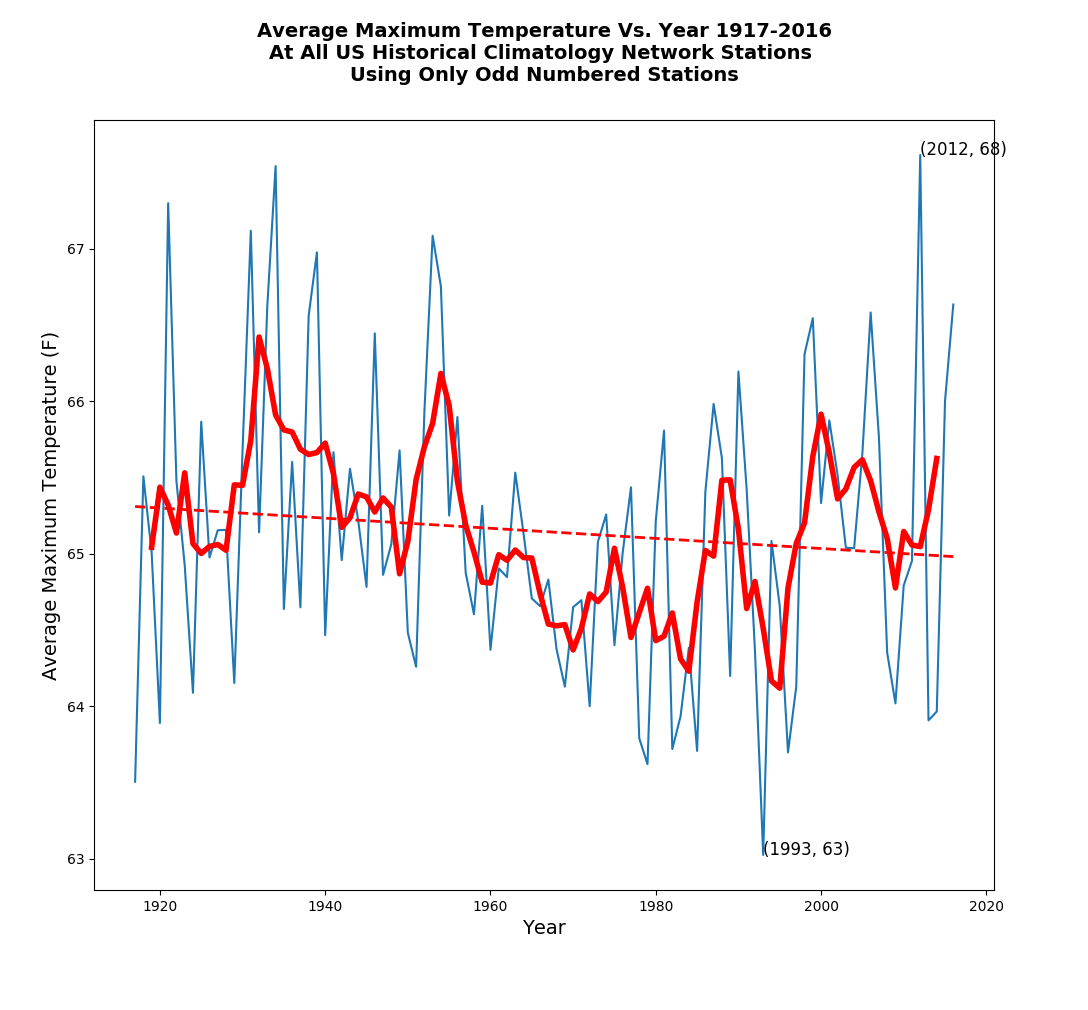

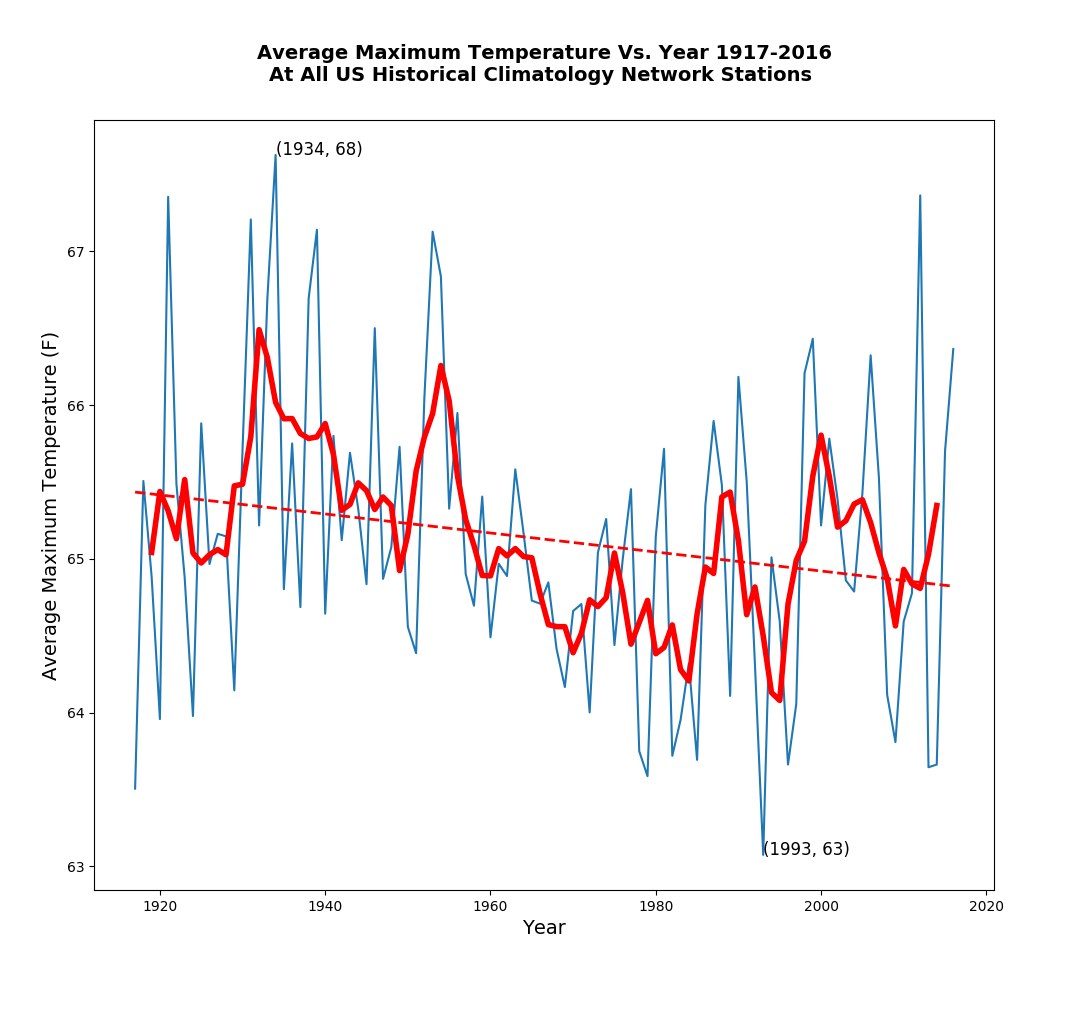

Using the set of 747 unchanging stations, maximum temperatures have declined over the past century – just like they do in the set of all stations.

Here is the equivalent NOAA “adjusted” graph for all stations. NOAA has turned cooling into warming via data tampering.

Climate at a Glance | National Centers for Environmental Information (NCEI)

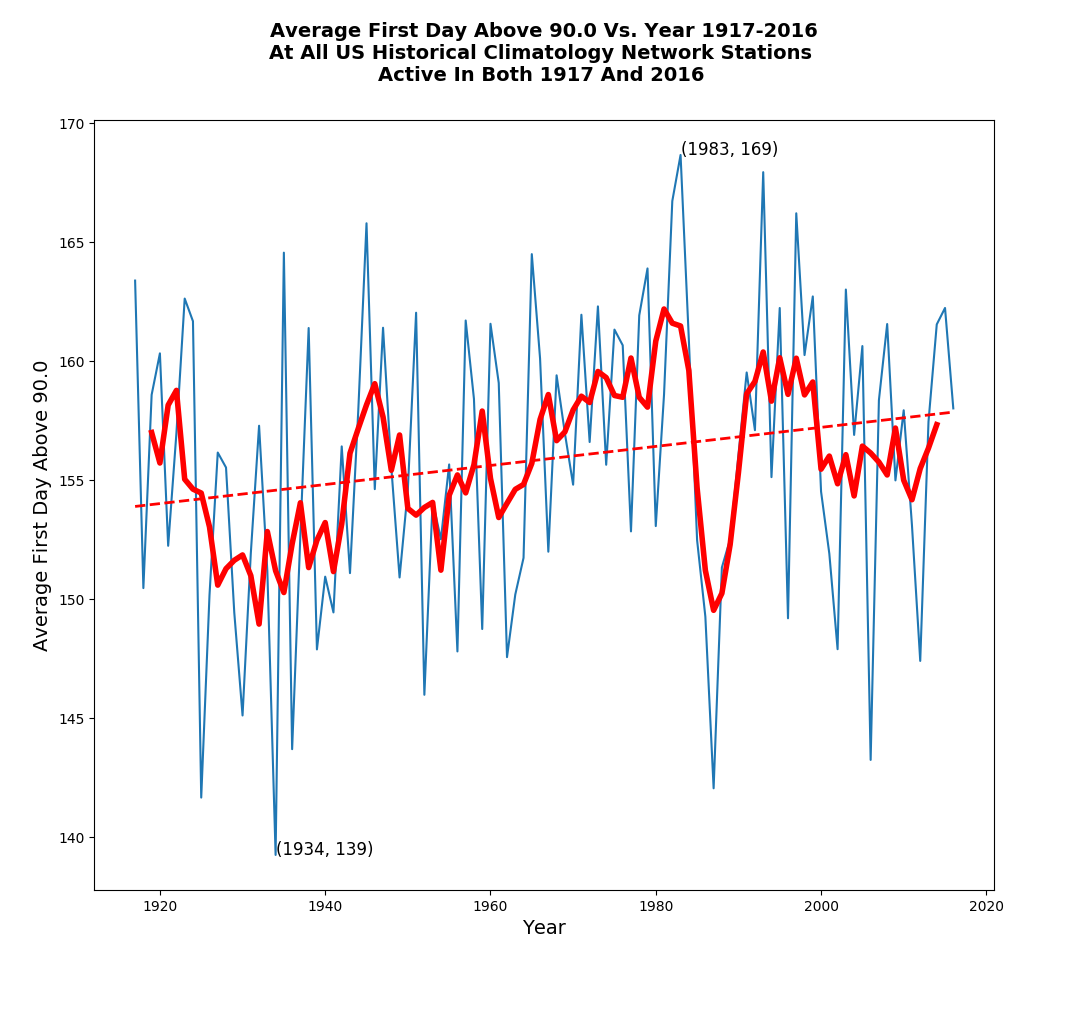

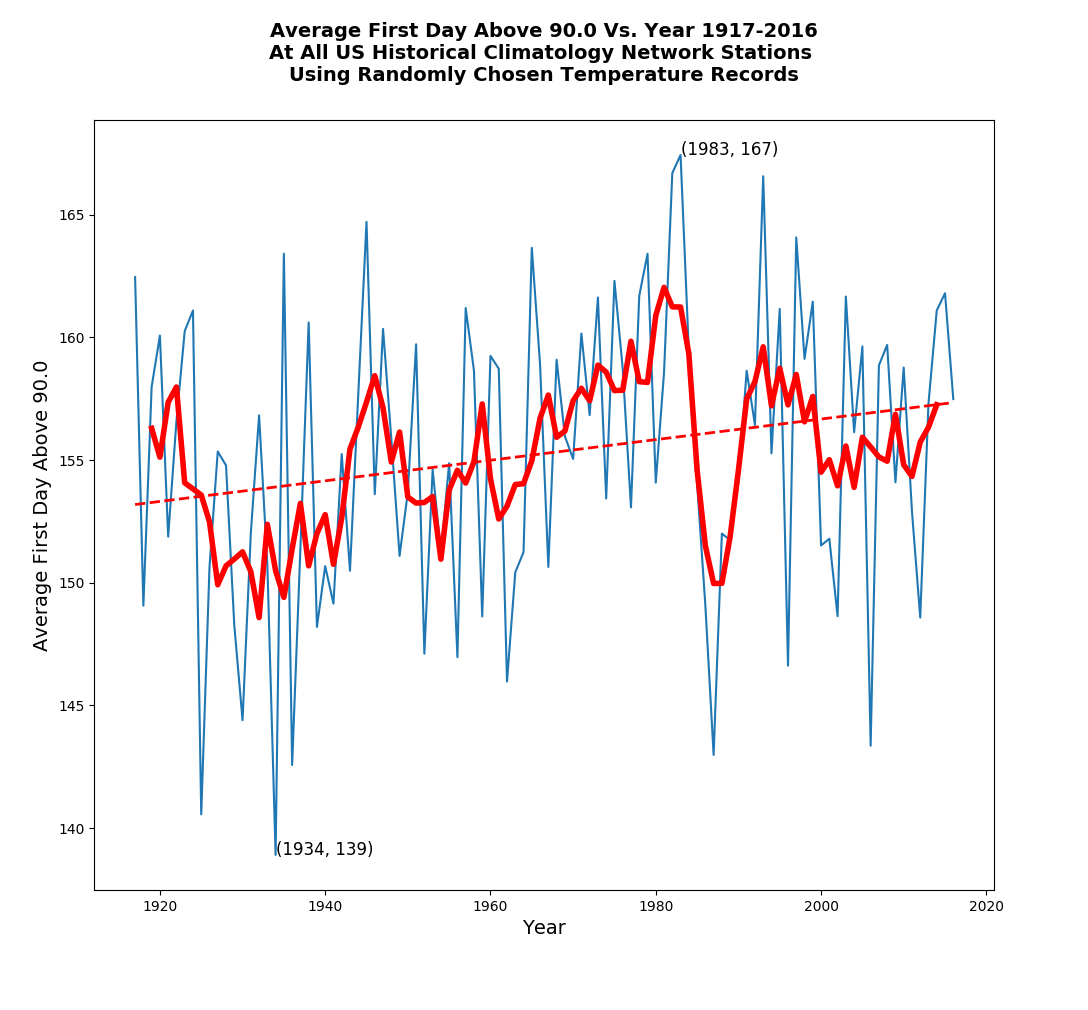

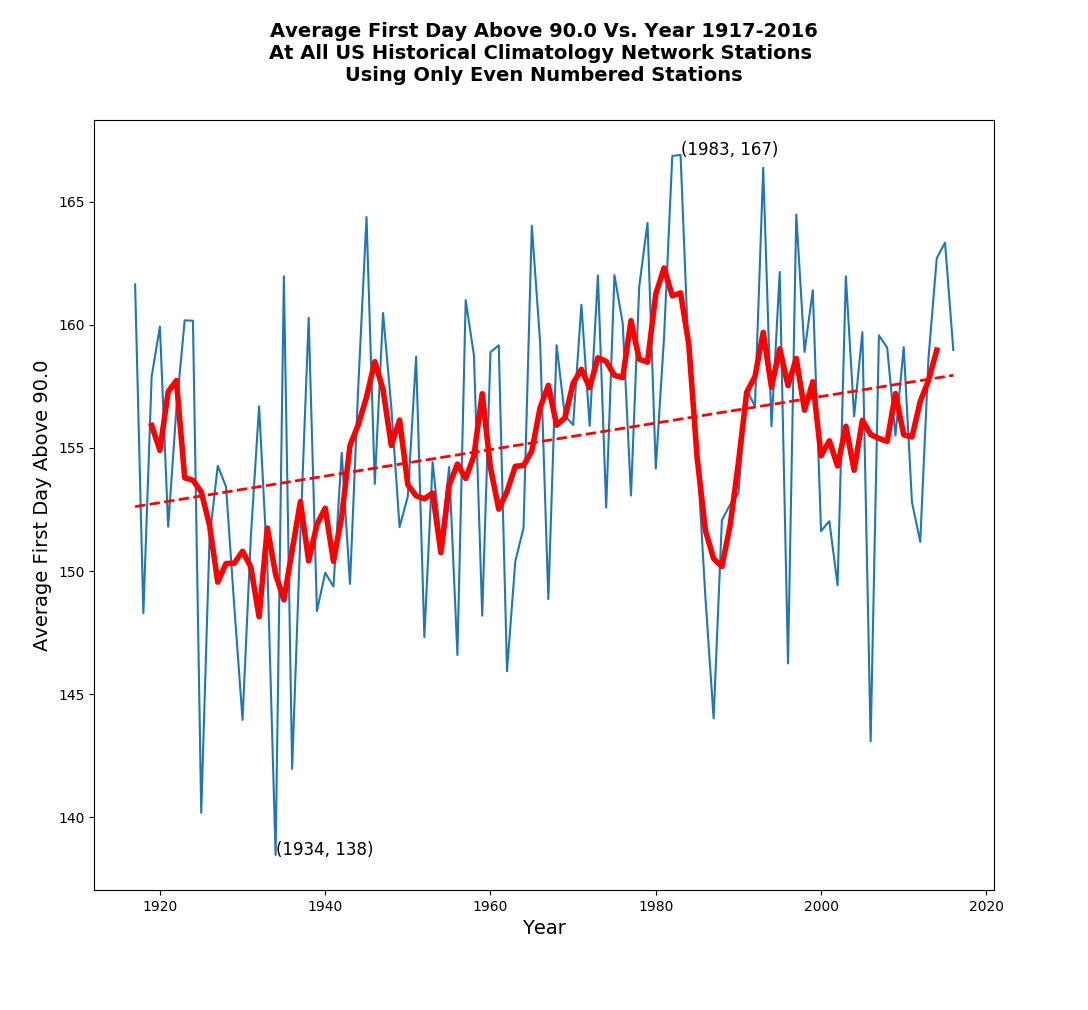

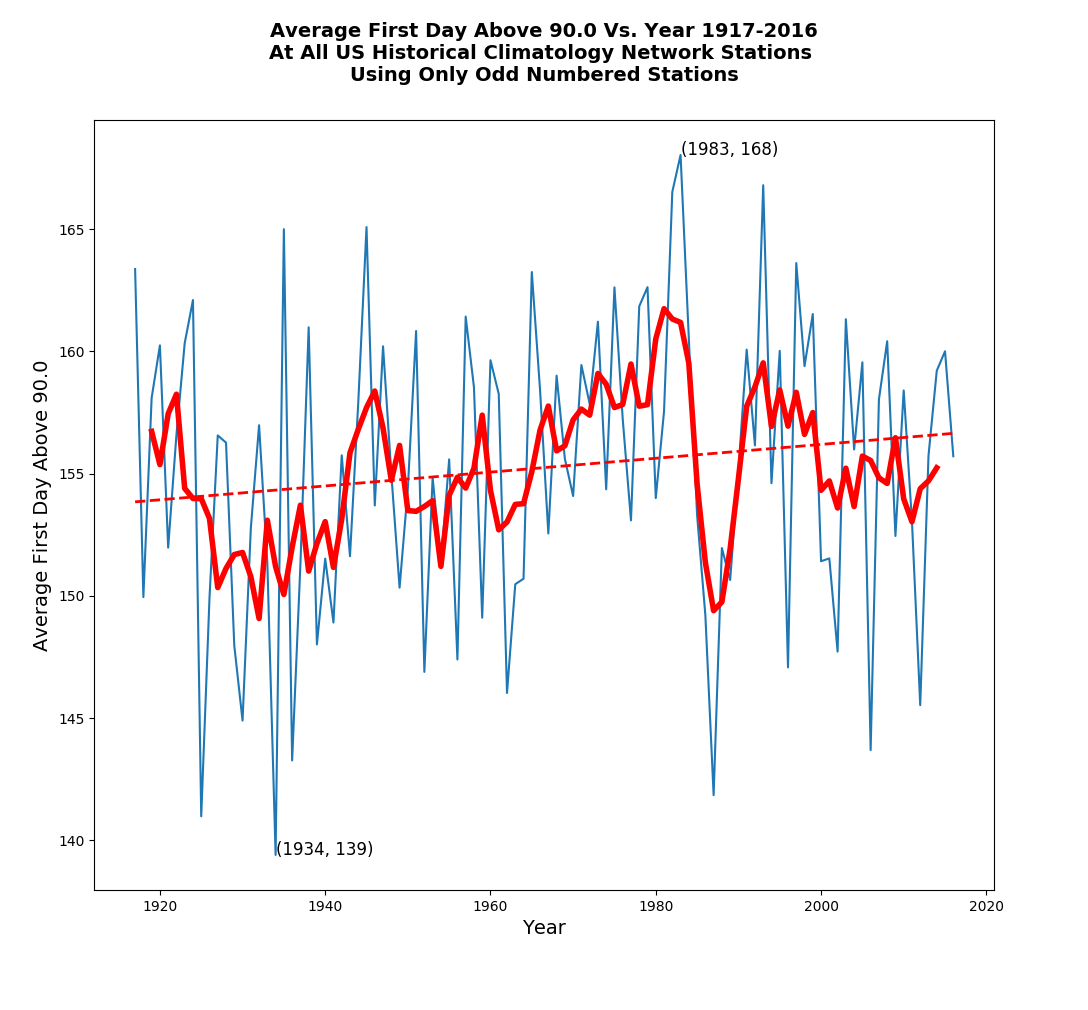

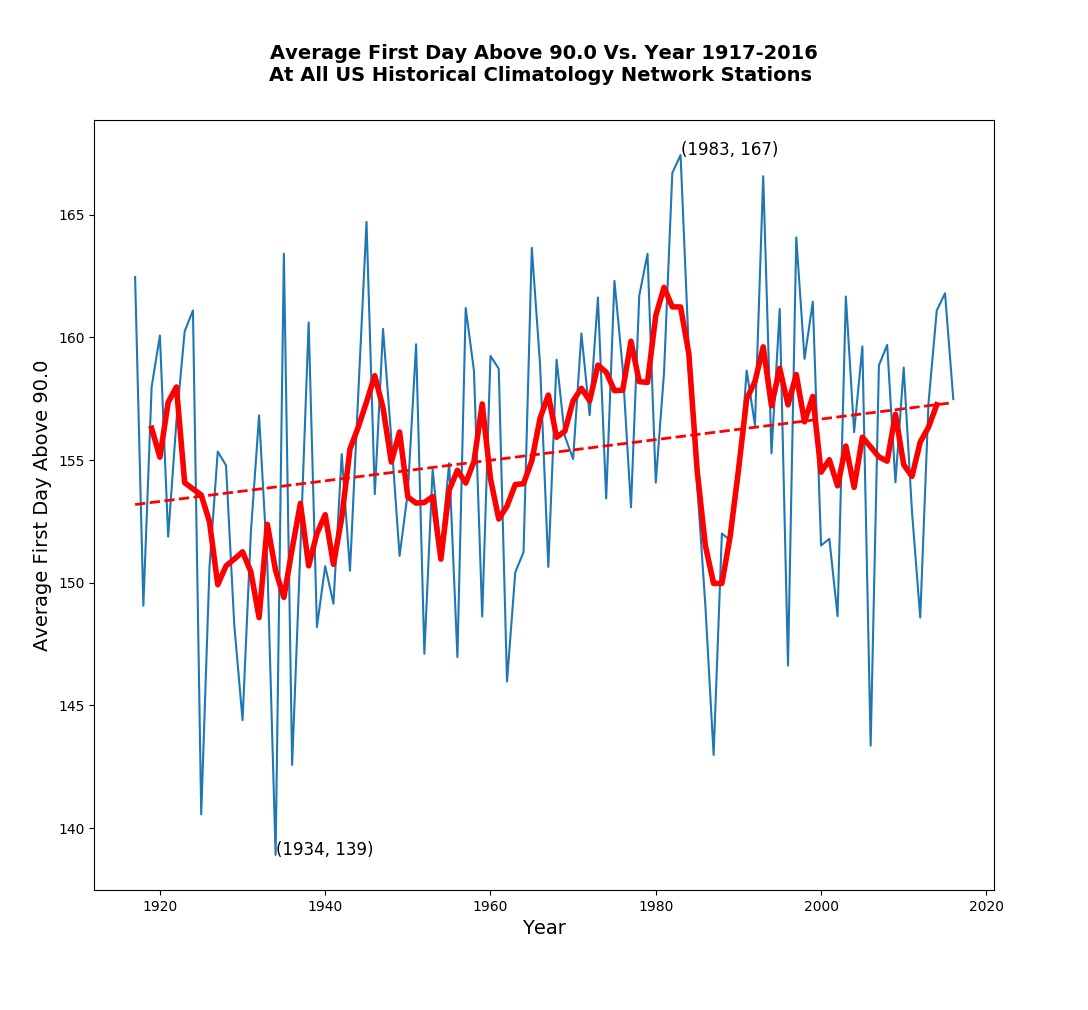

Looking at the set of 747 unchanging stations, the first hot day of summer is coming later.

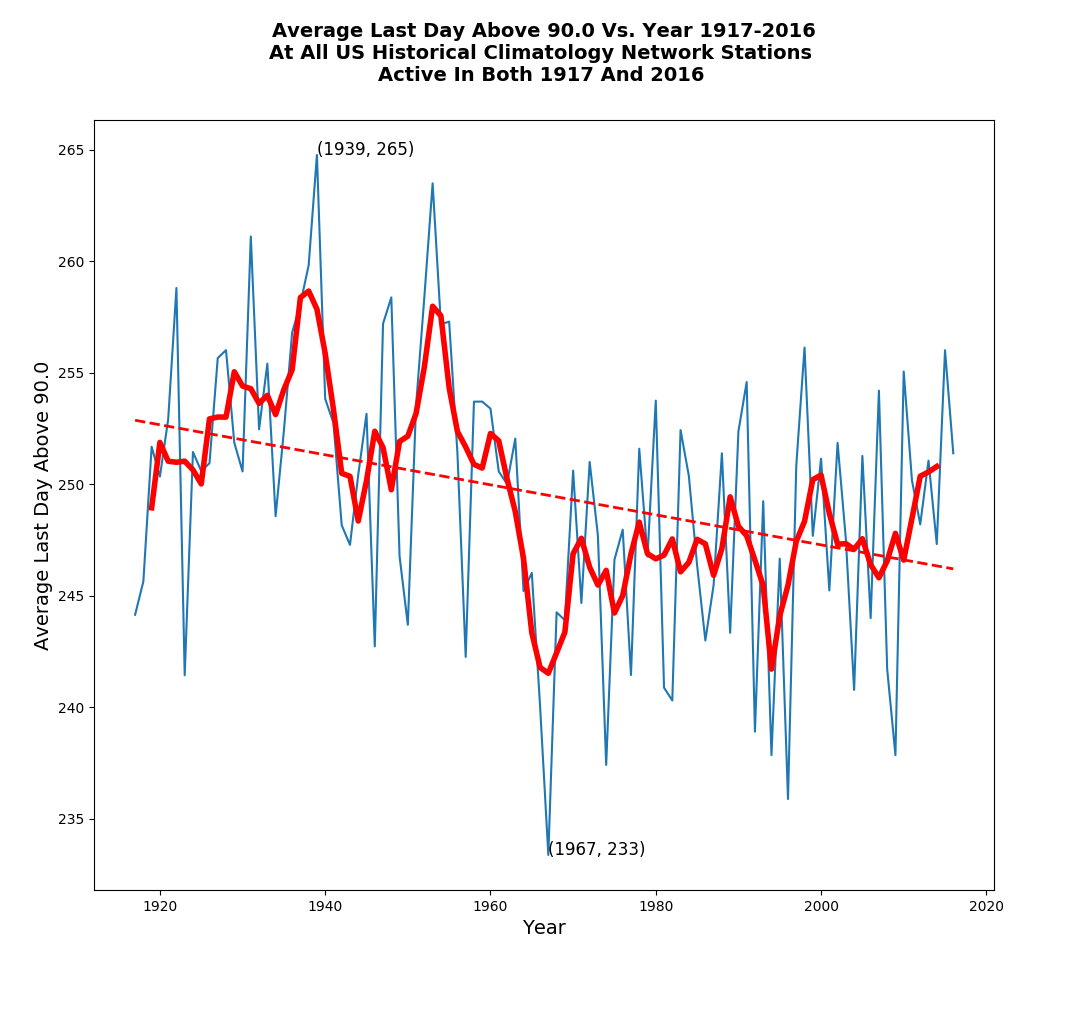

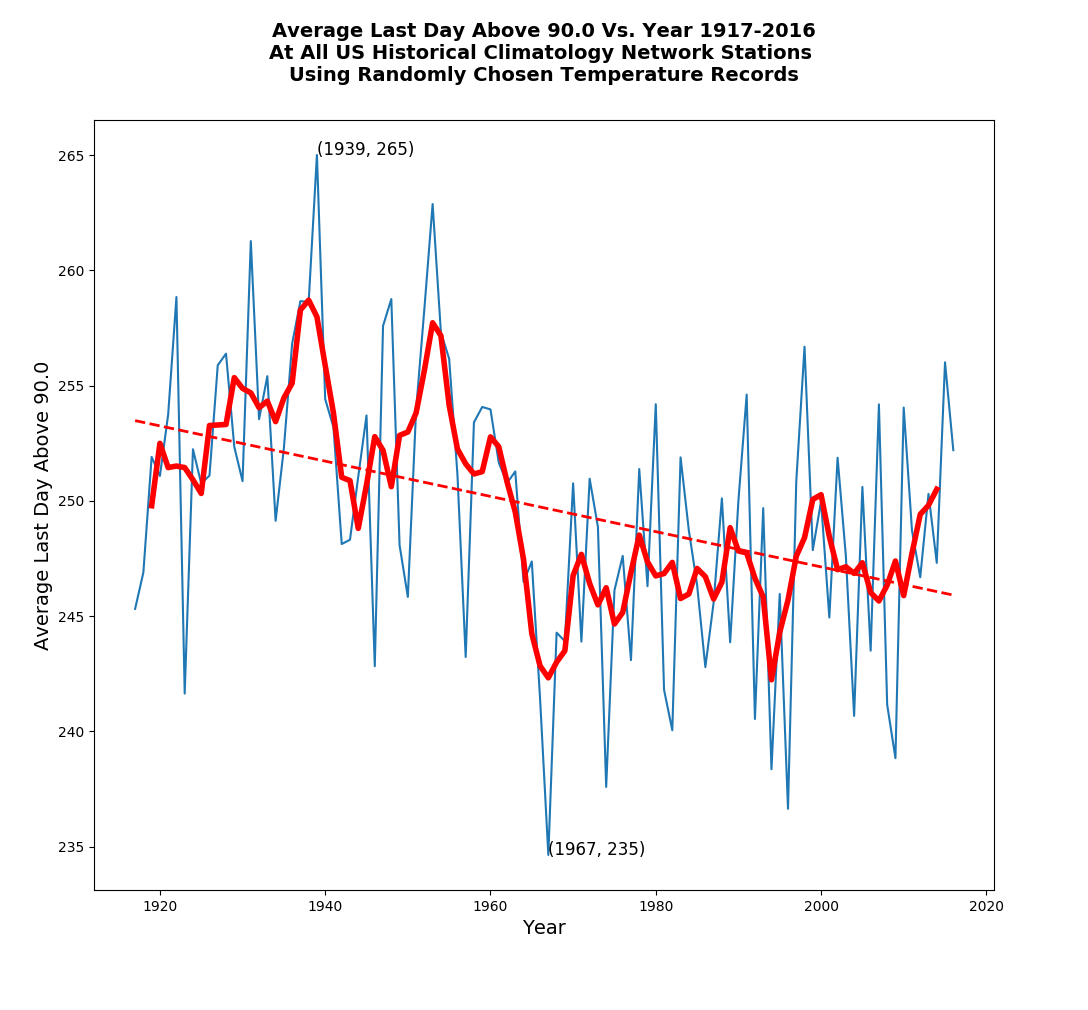

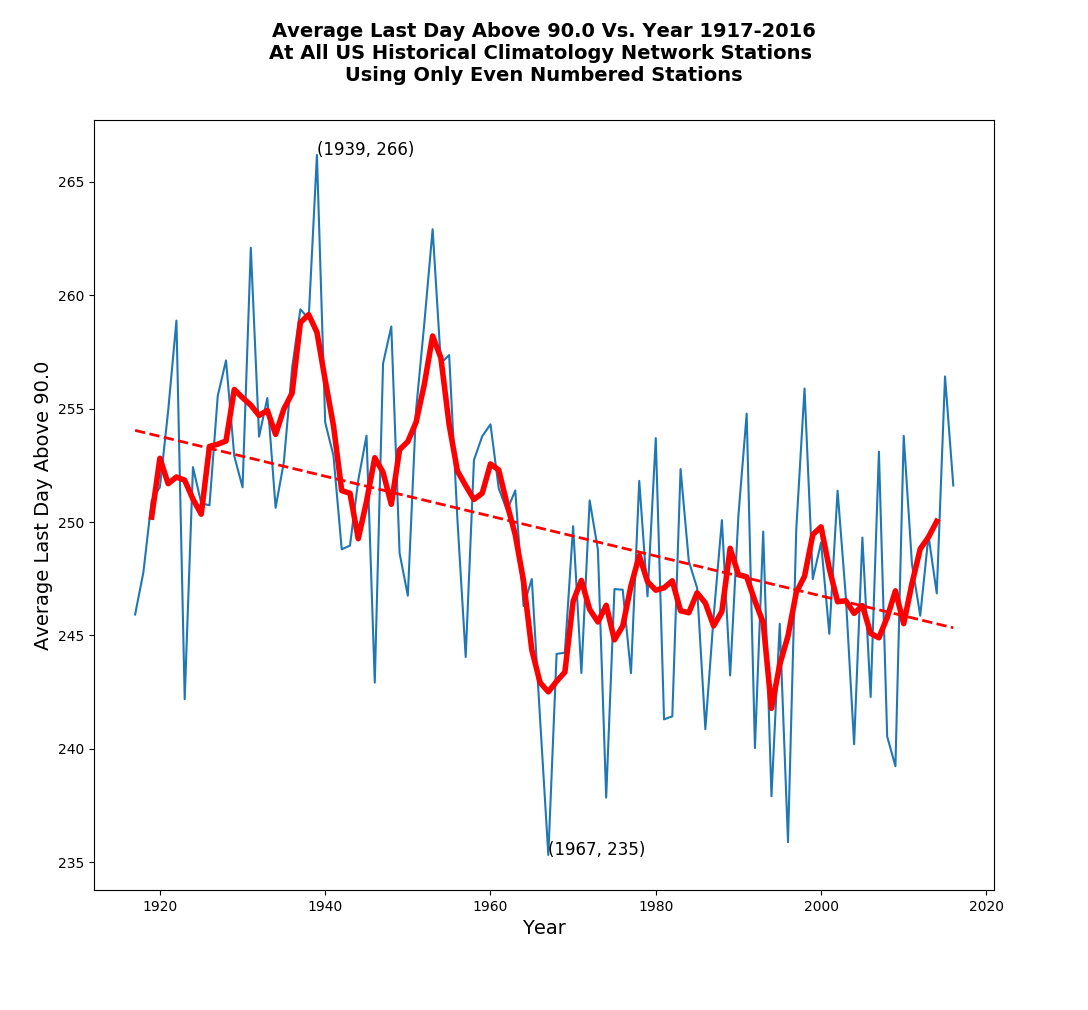

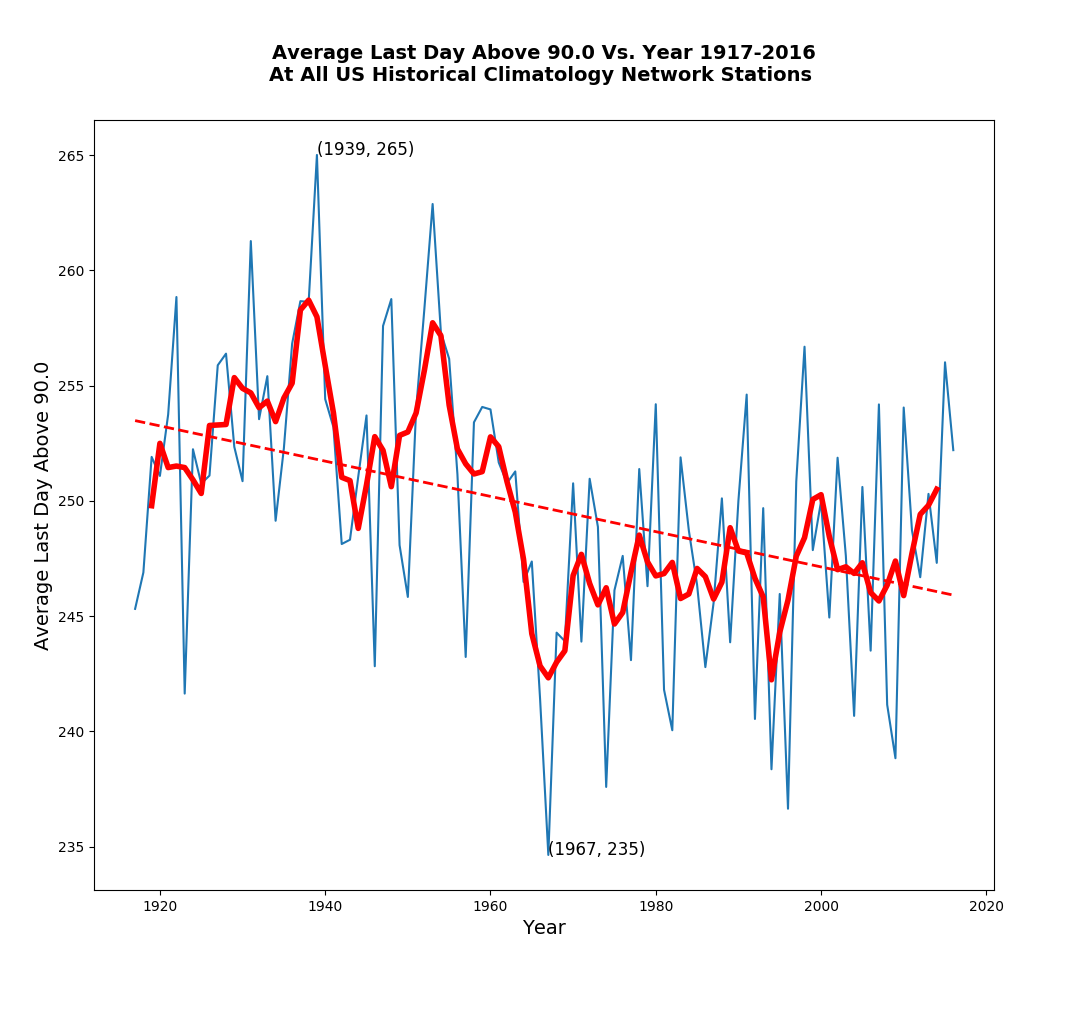

The last hot day of summer is coming earlier.
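The first and last hot day of a season can be found with a few lines of code. This is only a sketch: the 90F threshold is my assumption for what counts as a “hot day”, and the input format (a list of `(day_of_year, tmax_f)` pairs for one station and one year) and the sample values are hypothetical.

```python
def first_and_last_hot_day(daily_tmax, threshold_f=90.0):
    """Return (first_day, last_day) with Tmax at or above the threshold,
    or None if no day in the year reaches it."""
    hot_days = [day for day, tmax in daily_tmax if tmax >= threshold_f]
    if not hot_days:
        return None
    return min(hot_days), max(hot_days)

# Made-up toy year of (day_of_year, tmax_f) readings, for illustration only.
toy_year = [(150, 85.0), (165, 91.0), (200, 95.0), (240, 90.0), (260, 88.0)]
first_day, last_day = first_and_last_hot_day(toy_year)
```

Running this per station and per year, then averaging the two day numbers across stations, gives one way to build the kind of series discussed above.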

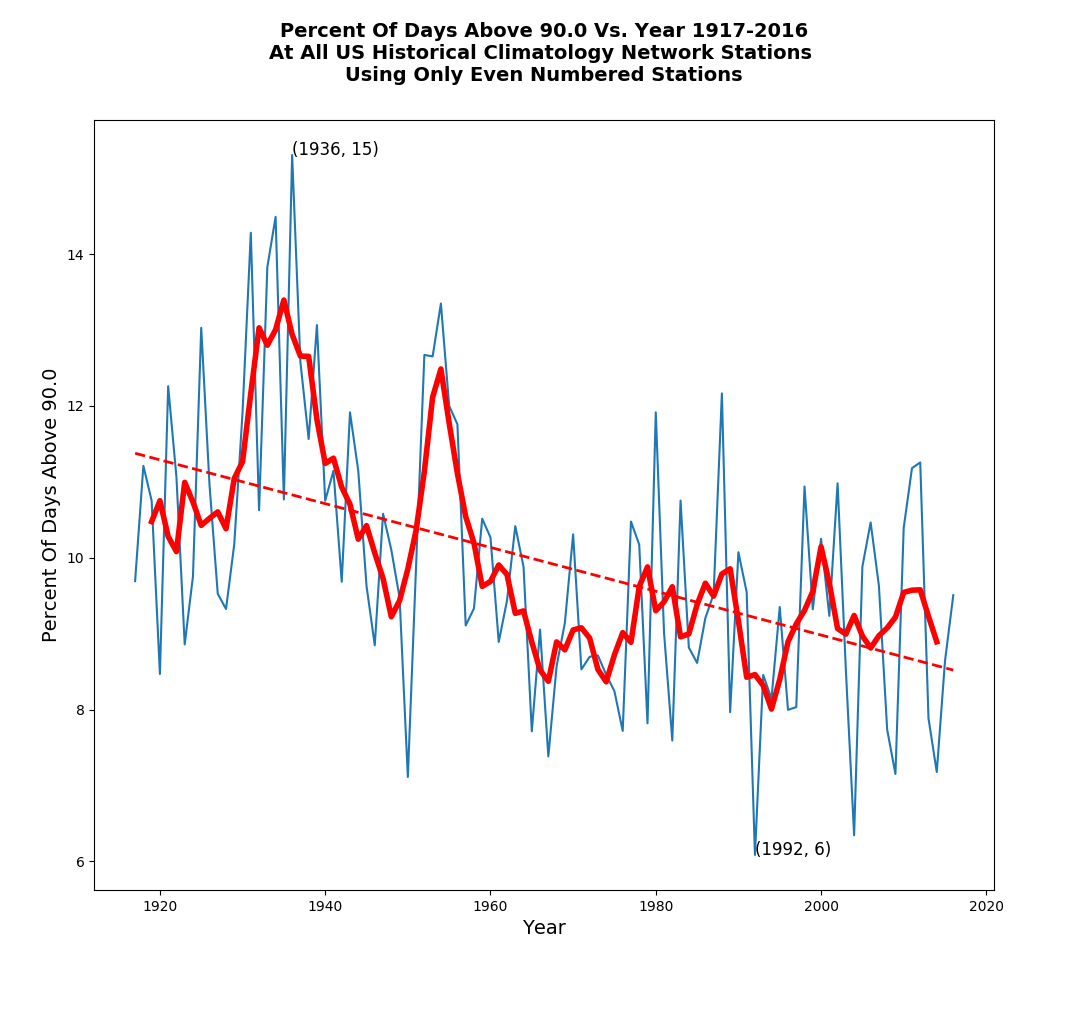

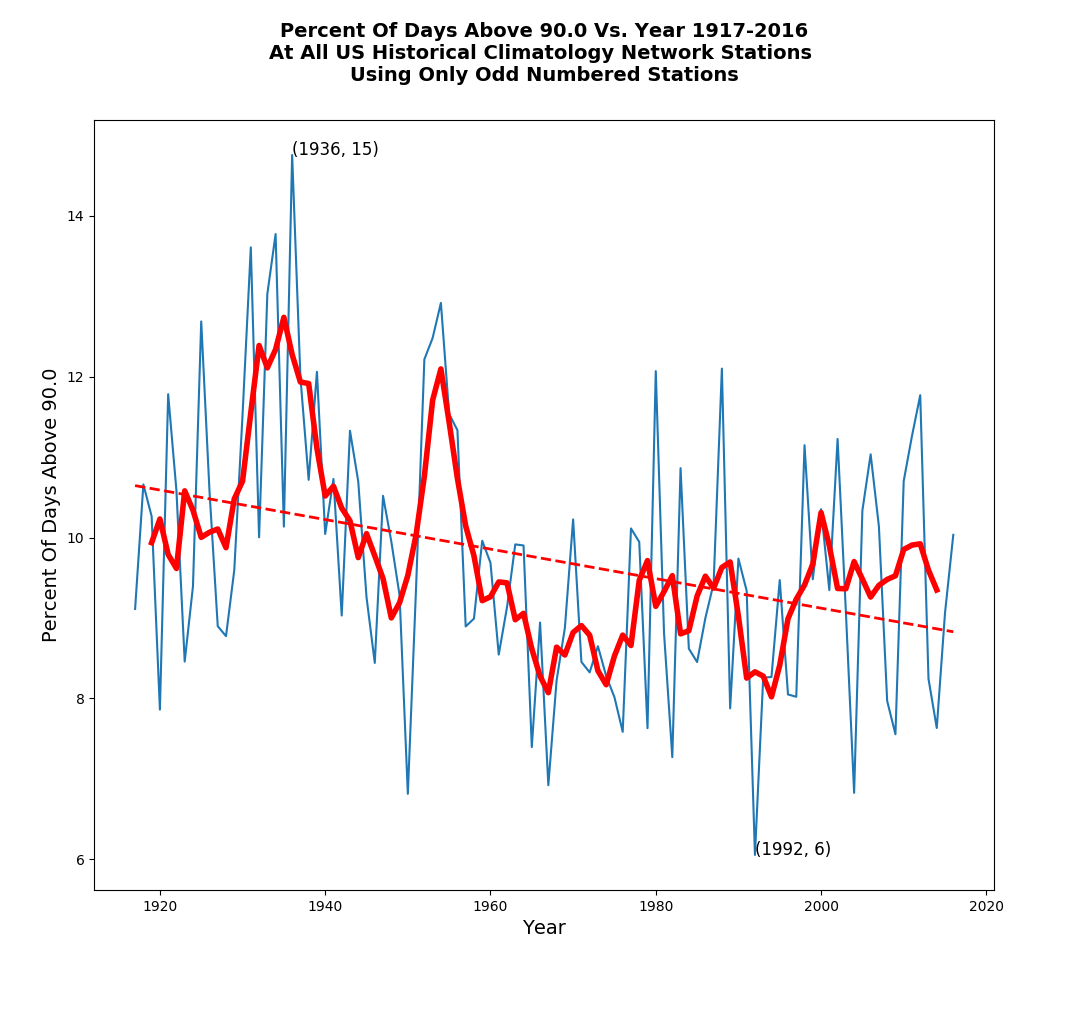

The frequency of hot days is declining.
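Hot day frequency is just a count divided by a total. The sketch below assumes one `(year, tmax_f)` entry per station-day and, again, a 90F threshold that I picked for illustration; the toy readings are made up.

```python
from collections import defaultdict

def hot_day_percentage(daily_records, threshold_f=90.0):
    """Return {year: percent of readings at or above the threshold}."""
    counts = defaultdict(lambda: [0, 0])  # year -> [hot readings, all readings]
    for year, tmax in daily_records:
        counts[year][1] += 1
        if tmax >= threshold_f:
            counts[year][0] += 1
    return {year: 100.0 * hot / total
            for year, (hot, total) in sorted(counts.items())}

# Made-up toy readings (year, tmax_f), for illustration only.
toy_days = [(2000, 95.0), (2000, 92.0), (2000, 80.0), (2000, 70.0),
            (2001, 91.0), (2001, 85.0), (2001, 80.0), (2001, 70.0)]
pct = hot_day_percentage(toy_days)
```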

We can already see that the adjustments are garbage. But let’s proceed.

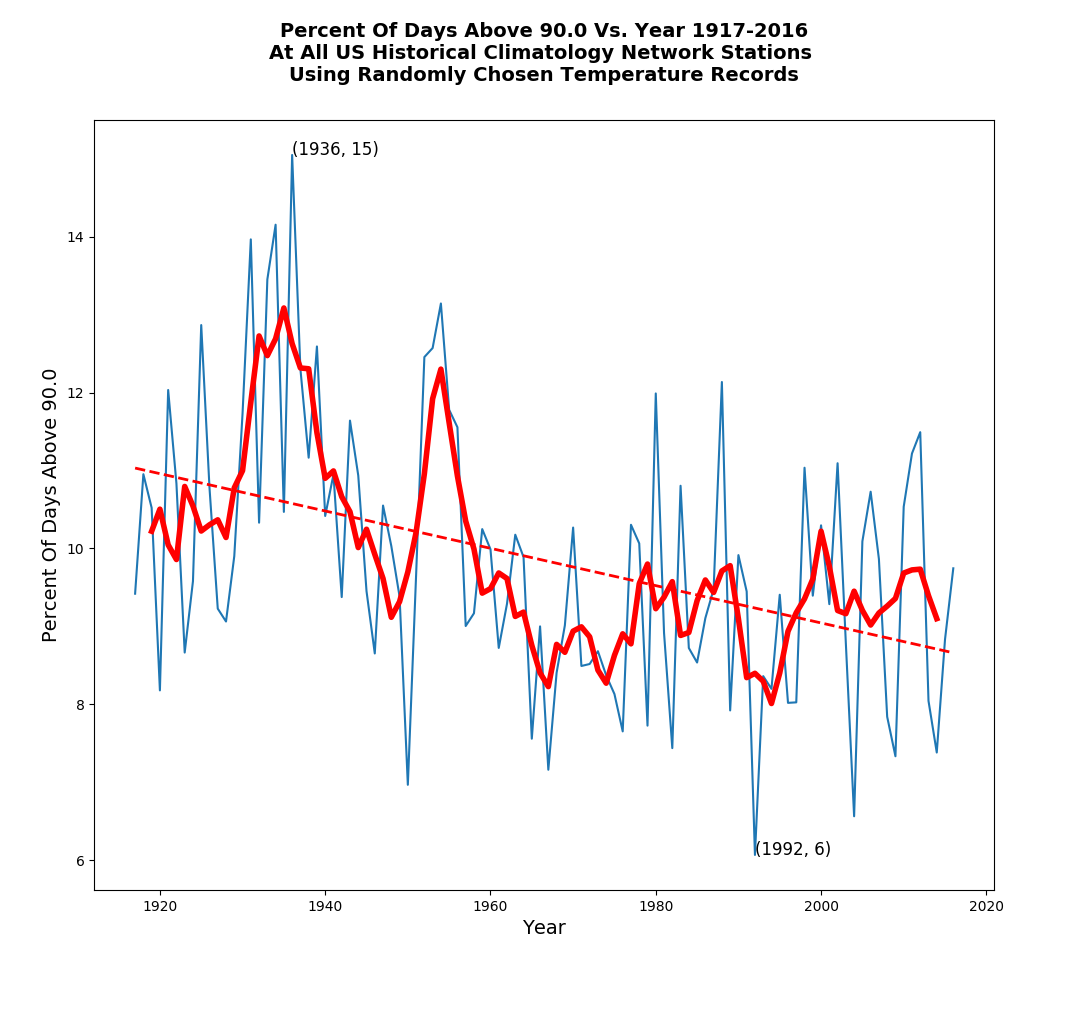

Now let’s try using a set of monthly data which is randomly chosen from month to month, so the station composition is changing dramatically every month. This experiment shows the exact same patterns as the set of all stations, indicating that the USHCN raw data is very robust, and changing station composition has little impact on patterns.
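One way to set up a random month-to-month selection is sketched below. The 50% sampling fraction, the fixed seed and the `(station_id, year, month, tmax)` record layout are all assumptions made for this example, and the toy values are synthetic.

```python
import random
from collections import defaultdict

def random_monthly_mean(records, fraction=0.5, seed=0):
    """For each (year, month), average a freshly drawn random subset
    of the readings reported that month."""
    rng = random.Random(seed)  # seeded so the experiment is repeatable
    by_month = defaultdict(list)
    for _station_id, year, month, tmax in records:
        by_month[(year, month)].append(tmax)
    result = {}
    for key in sorted(by_month):
        values = by_month[key]
        # Keep at least one reading and never more than are available.
        k = min(len(values), max(1, round(len(values) * fraction)))
        sample = rng.sample(values, k)
        result[key] = sum(sample) / k
    return result

# Synthetic toy data: 10 hypothetical stations, two months.
toy_monthly = [(f"S{i}", 2000, m, 60.0 + i + m) for m in (1, 2) for i in range(10)]
sample_means = random_monthly_mean(toy_monthly)
```

Comparing `sample_means` against the mean over all stations, month by month, shows how much the composition of the sample matters.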

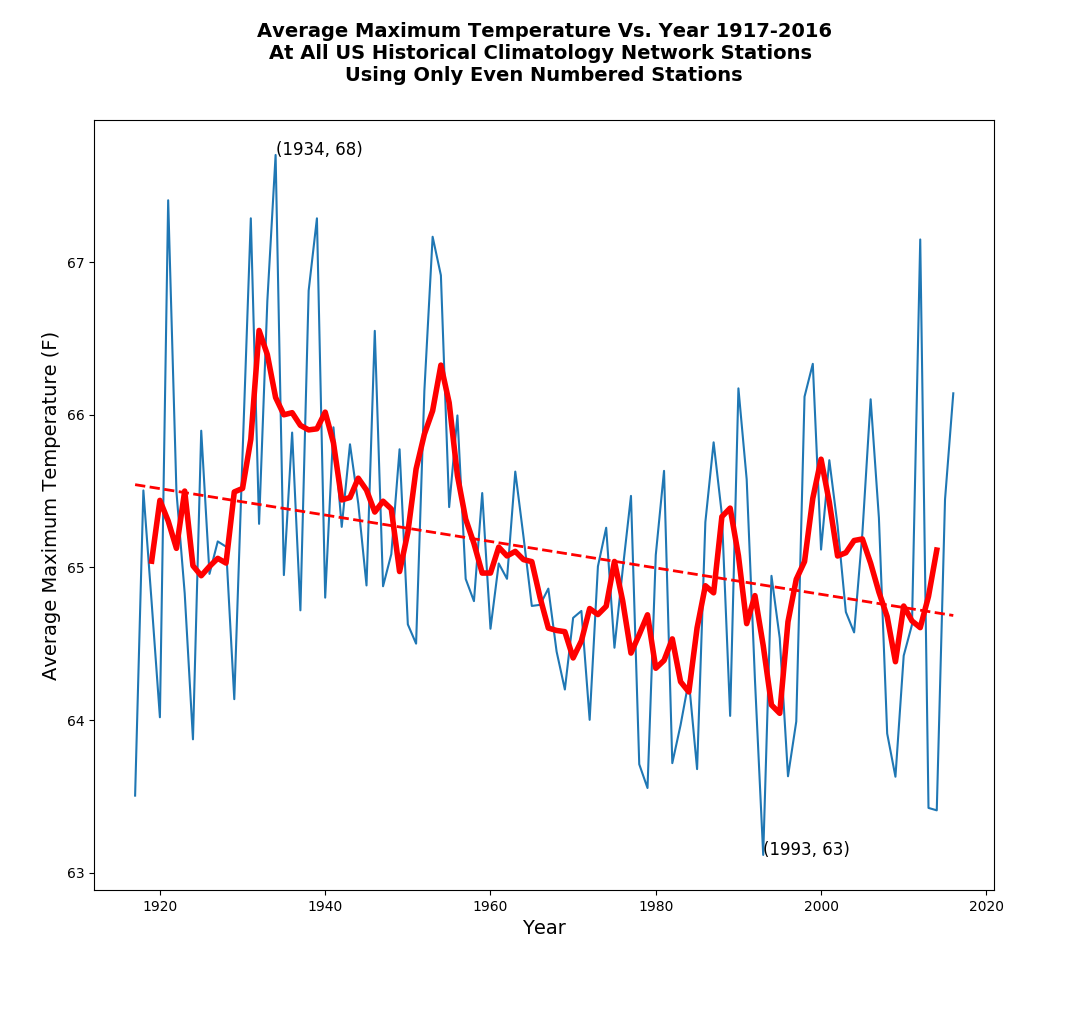

Now let’s try using only even-numbered USHCN stations. Again, we see the same pattern as the set of all stations.

Now let’s do the same thing for odd-numbered stations. Again, the same pattern.
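The even/odd split is simple to reproduce. This sketch assumes each station has an integer station number; the numbers and values below are hypothetical, not real USHCN identifiers or readings.

```python
def split_by_parity(values_by_station):
    """Split {station_number: value} into (even-numbered, odd-numbered) dicts."""
    evens = {n: v for n, v in values_by_station.items() if n % 2 == 0}
    odds = {n: v for n, v in values_by_station.items() if n % 2 == 1}
    return evens, odds

def mean(values):
    values = list(values)
    return sum(values) / len(values)

# Hypothetical station numbers mapped to made-up Tmax values (F).
toy_stations = {101: 70.0, 102: 72.0, 103: 74.0, 104: 68.0, 105: 66.0}
evens, odds = split_by_parity(toy_stations)
even_mean = mean(evens.values())
odd_mean = mean(odds.values())
```

The two halves share no stations, so if both show the same pattern, it is not the product of any particular station list.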

Finally, let’s look at the same set of graphs for all USHCN stations. Again, exactly the same patterns.

In the past, Gavin Schmidt at NASA has stated that we don’t need very many US stations to make a robust temperature record – and he was correct. The USHCN stations were chosen precisely because they were robust.

Obviously there are deterministic ways we could force changing station composition to impact the trend (like intentionally removing southern states after the year 1970) – but the random changes to USHCN station composition over time have very little impact on the trend. Nick and Zeke are simply using that as a smokescreen for NOAA to hide their data tampering fraud.
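The deterministic case is easy to demonstrate with a toy example. Below, two made-up stations (one warm “south”, one cool “north”) report constant temperatures, and the warm one is deliberately dropped after 1970. The station names, values and the `active` callback are all hypothetical.

```python
def mean_by_year(temps_by_station, active):
    """temps_by_station: {station: {year: tmax}}.
    active(station, year) decides whether a reading is included."""
    years = sorted({y for series in temps_by_station.values() for y in series})
    out = {}
    for year in years:
        vals = [series[year] for st, series in temps_by_station.items()
                if year in series and active(st, year)]
        if vals:  # skip years where every station was excluded
            out[year] = sum(vals) / len(vals)
    return out

# Made-up toy stations whose temperatures never change.
toy_regions = {
    "south": {1969: 80.0, 1970: 80.0, 1971: 80.0},
    "north": {1969: 60.0, 1970: 60.0, 1971: 60.0},
}
unbiased = mean_by_year(toy_regions, lambda st, yr: True)
biased = mean_by_year(toy_regions, lambda st, yr: not (st == "south" and yr > 1970))
```

Here `biased` drops from 70F to 60F in 1971 even though no individual station changed. That is the kind of systematic removal that would be needed to move the average.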