Zeke and Nick and all the other apologists for NOAA data tampering claim that adjustments to US temperatures are due to Time of Observation Bias or some other sciencey-sounding reason, but the reality is that the data is simply fake.

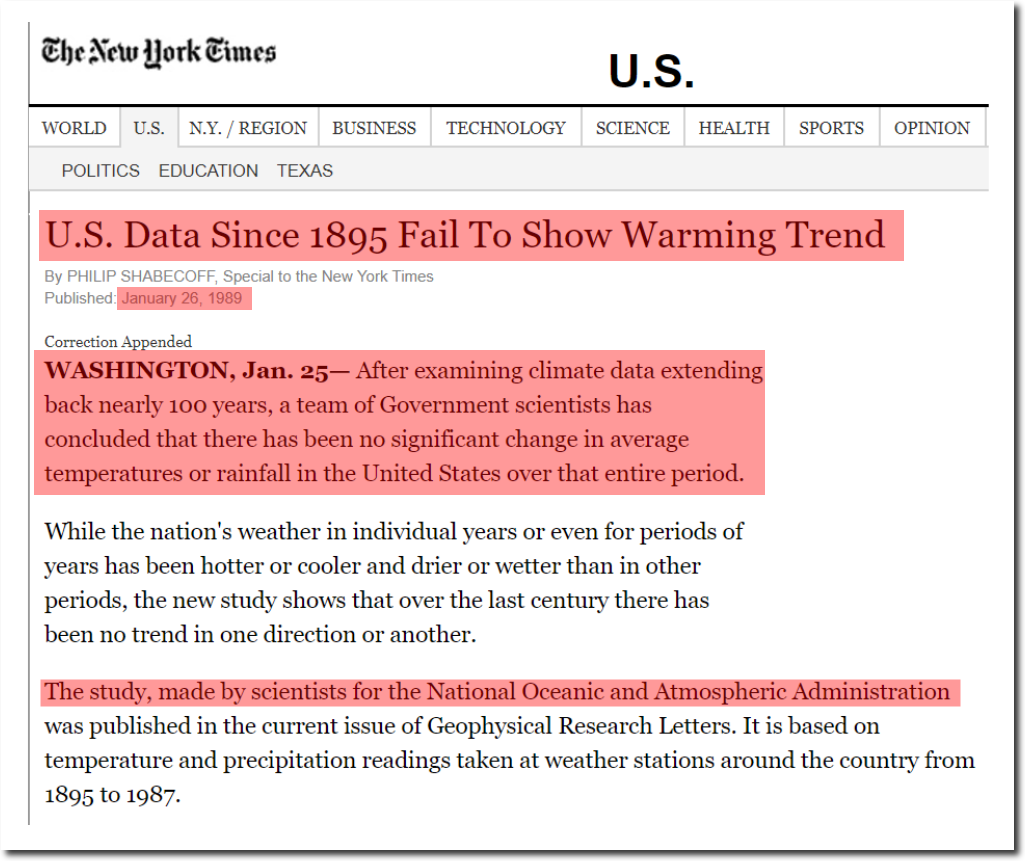

In 1989, NOAA reported no US warming since 1895.

U.S. Data Since 1895 Fail To Show Warming Trend

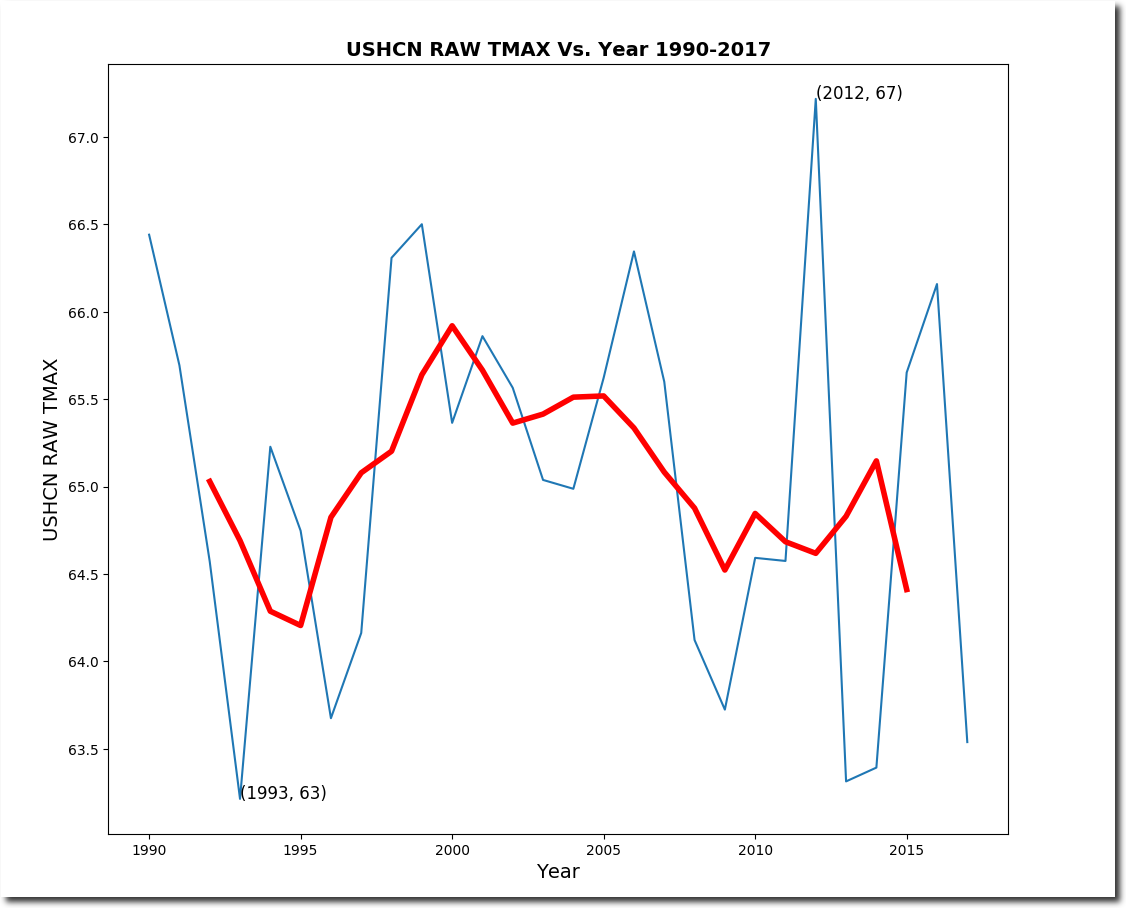

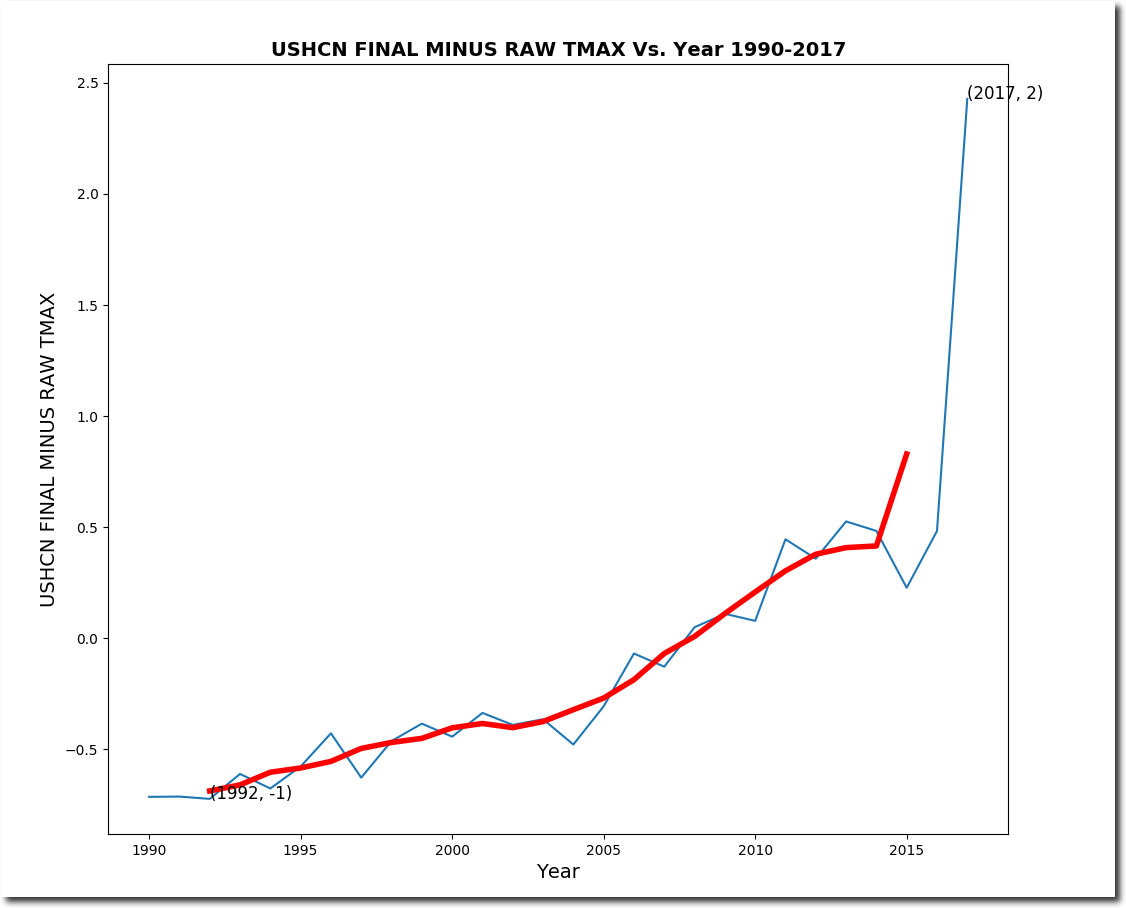

So what has been happening since 1990? The measured NOAA data shows no warming.

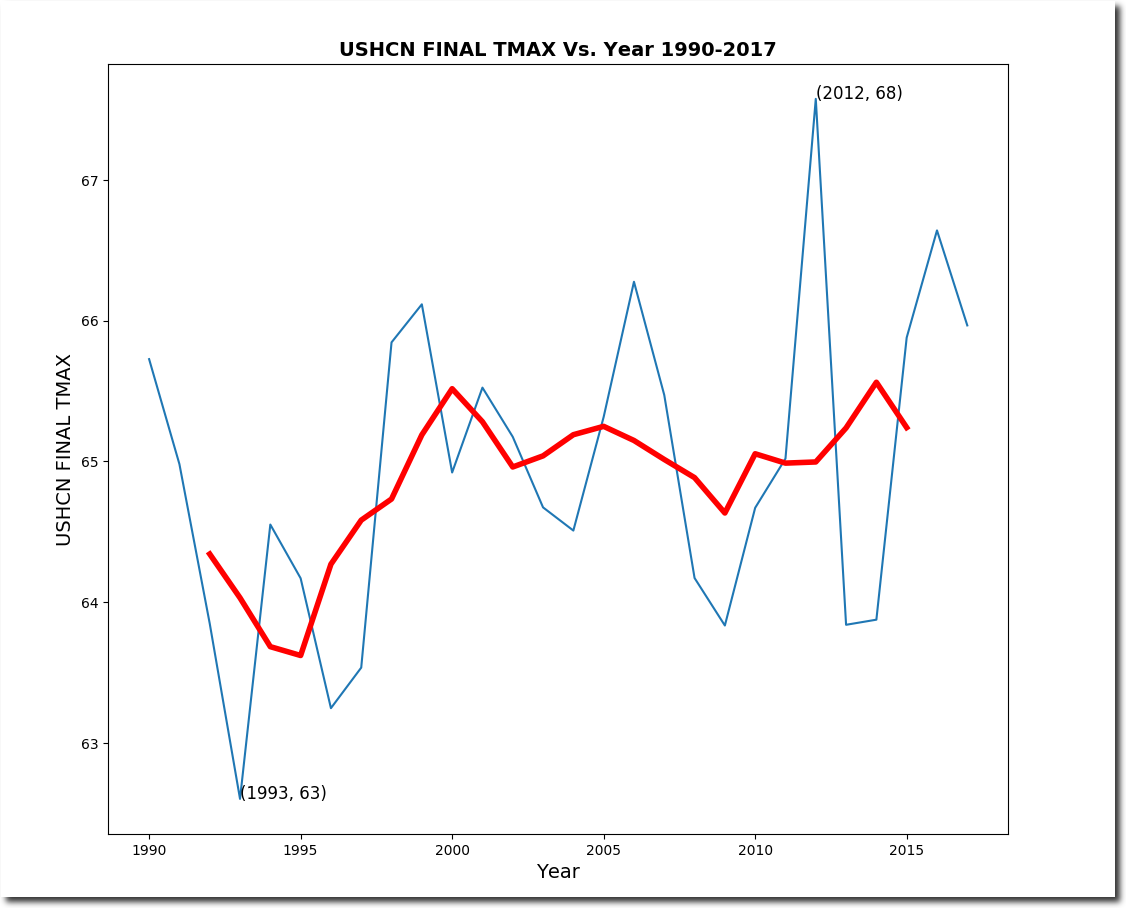

But the adjusted data shows a lot of warming.

The adjustments form a familiar hockey stick of data tampering.

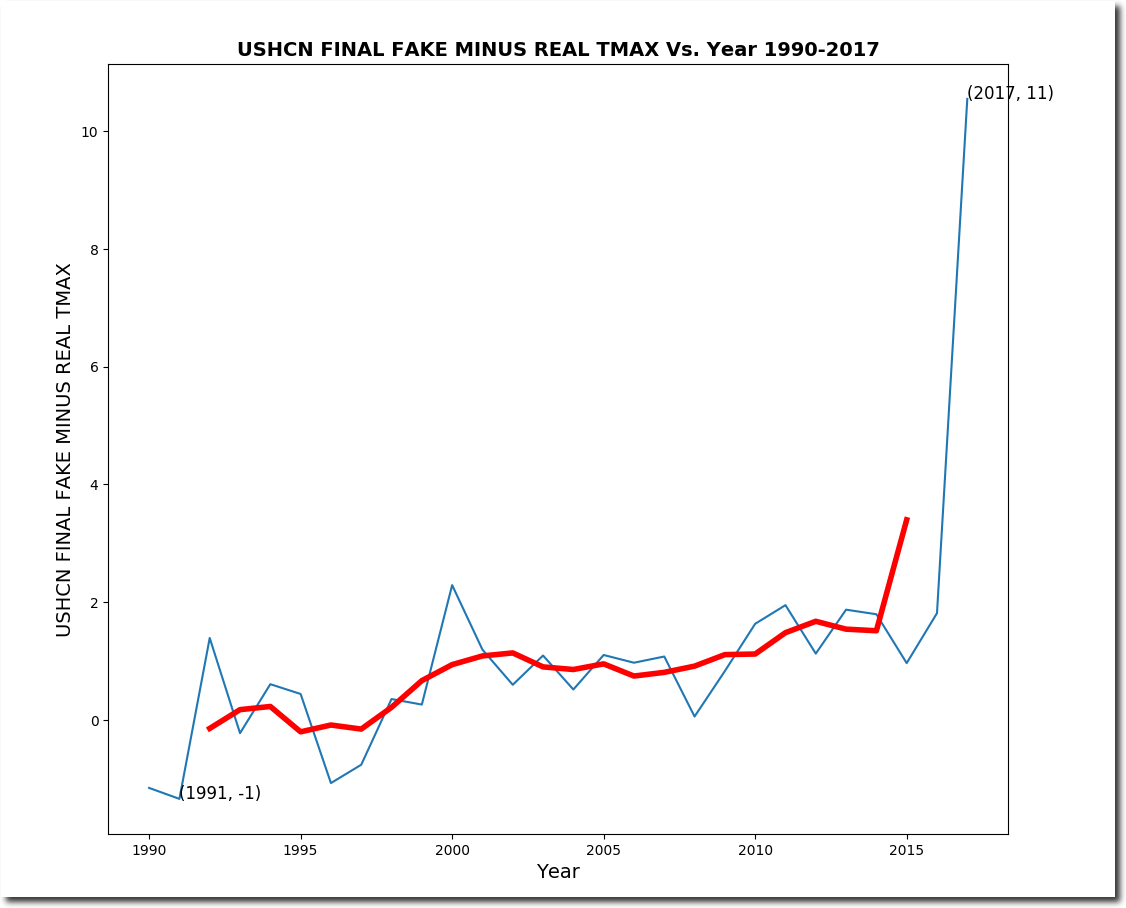

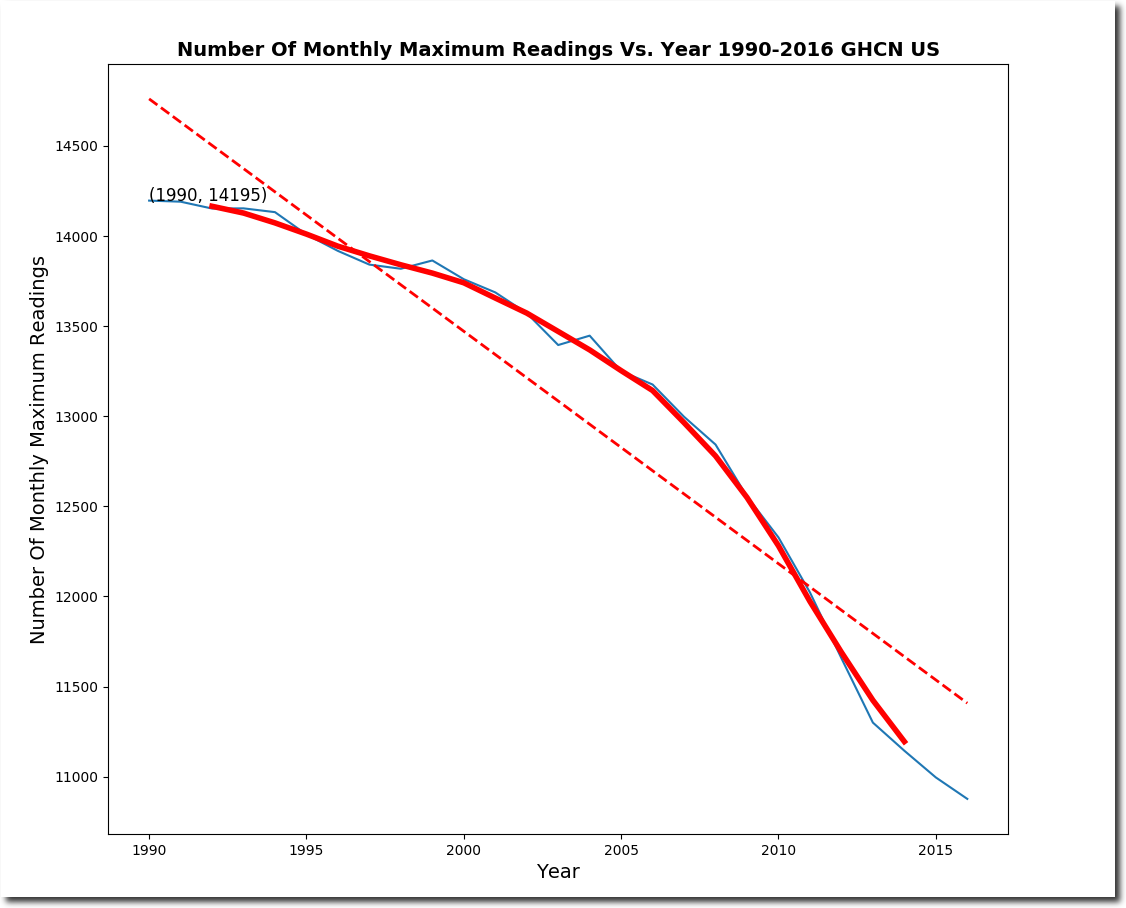

Let’s look at what NOAA is doing to make this happen. Since 1990, NOAA has been losing monthly temperature data at a phenomenal rate. When they don’t have real data, they simply make up fake data. Almost 50% of their “adjusted” data is now fake, but in 1991 it was only 16% fake.

I split up the NOAA adjusted (Final) data into two categories – “real” data has underlying thermometer data, and “fake” data has no underlying thermometer data. The “real” adjusted data shows very little warming.

But the fake adjusted data shows lots of warming.

The infilling of fake adjusted data (values with no underlying thermometer data) corrupts the US temperature record with a spectacular hockey stick.

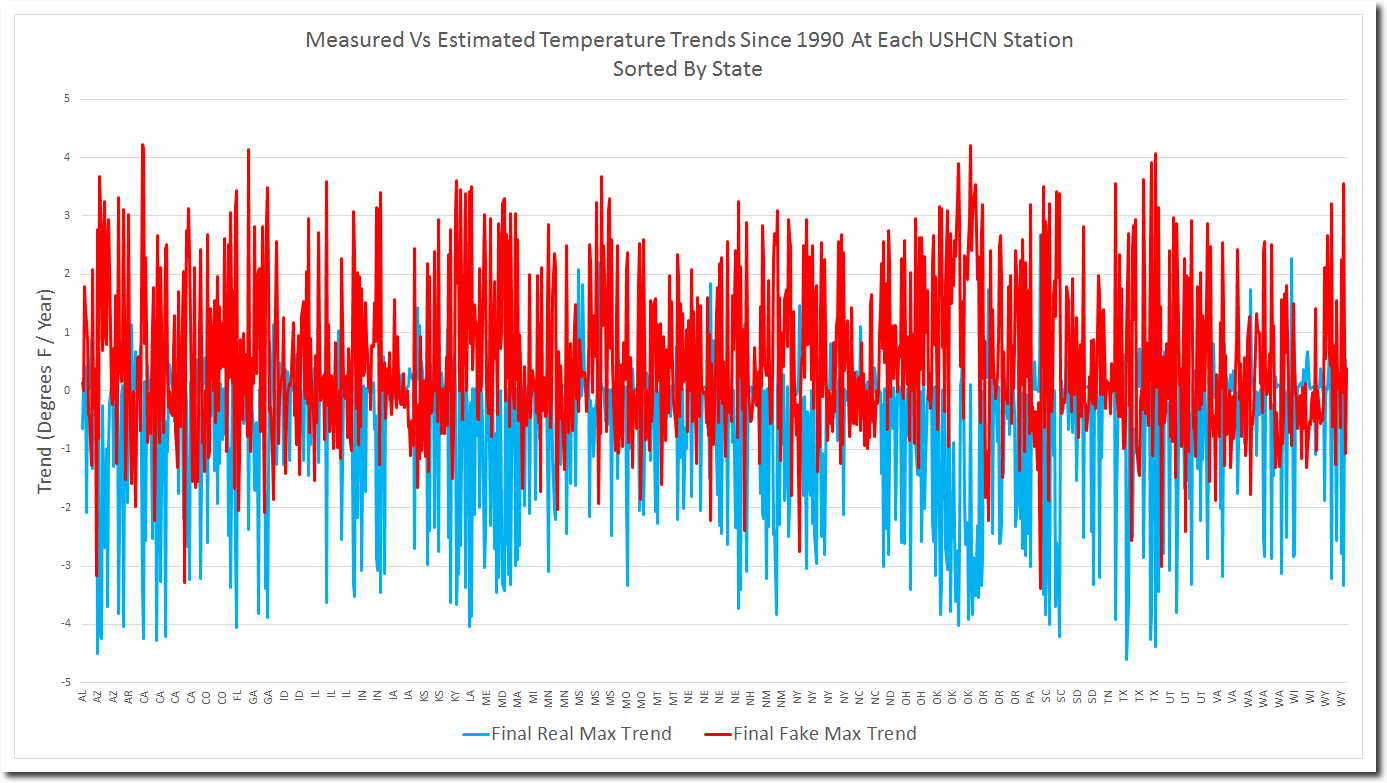

But it is much worse than it seems. The graph below plots the real adjusted (blue) and fake adjusted (red) trends for all 1200 USHCN stations. NOAA is consistently infilling missing cold temperatures with fake warm temperatures, across the board and across the country. How cynical can they get?

When confronted about their data tampering, they say “our algorithm works exactly as designed.”

In other words, the fraud is intentional. But I'm not done yet. The fraud is worse than it seems (if that is possible). Almost 50% of USHCN adjusted data is now fake, but they have only lost 25% of their data. Missing thermometer readings can explain only about half of the fake data, so they are throwing out a large percentage of their measured data.
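The arithmetic behind that claim can be made explicit. This is a minimal sketch, assuming (hypothetically) that the final data has a value for every station-month, so missing raw data can account for at most as many estimated values as there are missing readings. The function names are illustrative.

```python
def estimated_with_raw(pct_estimated: float, pct_raw_missing: float) -> float:
    """Lower bound, in percentage points of all final station-months, on the
    values that are estimated even though a raw reading exists.

    Assumes the final data has a value for every station-month, so at most
    pct_raw_missing points of the estimates are explained by missing raw data."""
    return max(0.0, float(pct_estimated - pct_raw_missing))


def share_of_raw_replaced(pct_estimated: float, pct_raw_missing: float) -> float:
    """The same lower bound, expressed as a percent of the raw readings that exist."""
    return 100.0 * estimated_with_raw(pct_estimated, pct_raw_missing) / (100.0 - pct_raw_missing)
```

With the figures above (almost 50% estimated, 25% missing), `estimated_with_raw(50, 25)` returns `25.0` percentage points, which is one third of the 75% of station-months that still have a thermometer reading.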

The next phase of my discovery process will be to find out what type of measured data they are throwing out and replacing with fake data. But it seems a safe bet that they are tossing measured cold data, and replacing it with fake hot data.

This is the biggest and most cynical scam in science history.